Imagine talking to someone who forgets what you told them ten minutes ago. Large language models (LLMs) face a similar problem in extended conversations: despite large context windows, they often fail to recall key information from earlier in the dialogue, which leads to inconsistent or contradictory responses.

This limitation matters for applications such as personal AI companions, which build rapport by remembering a user's preferences, and healthcare assistants, which must recall a patient's medical history to give accurate guidance.

A Memory Solution: Recursive Summarization

Researchers have developed a simple yet effective solution to this problem: having language models create, and continuously update, their own memory.

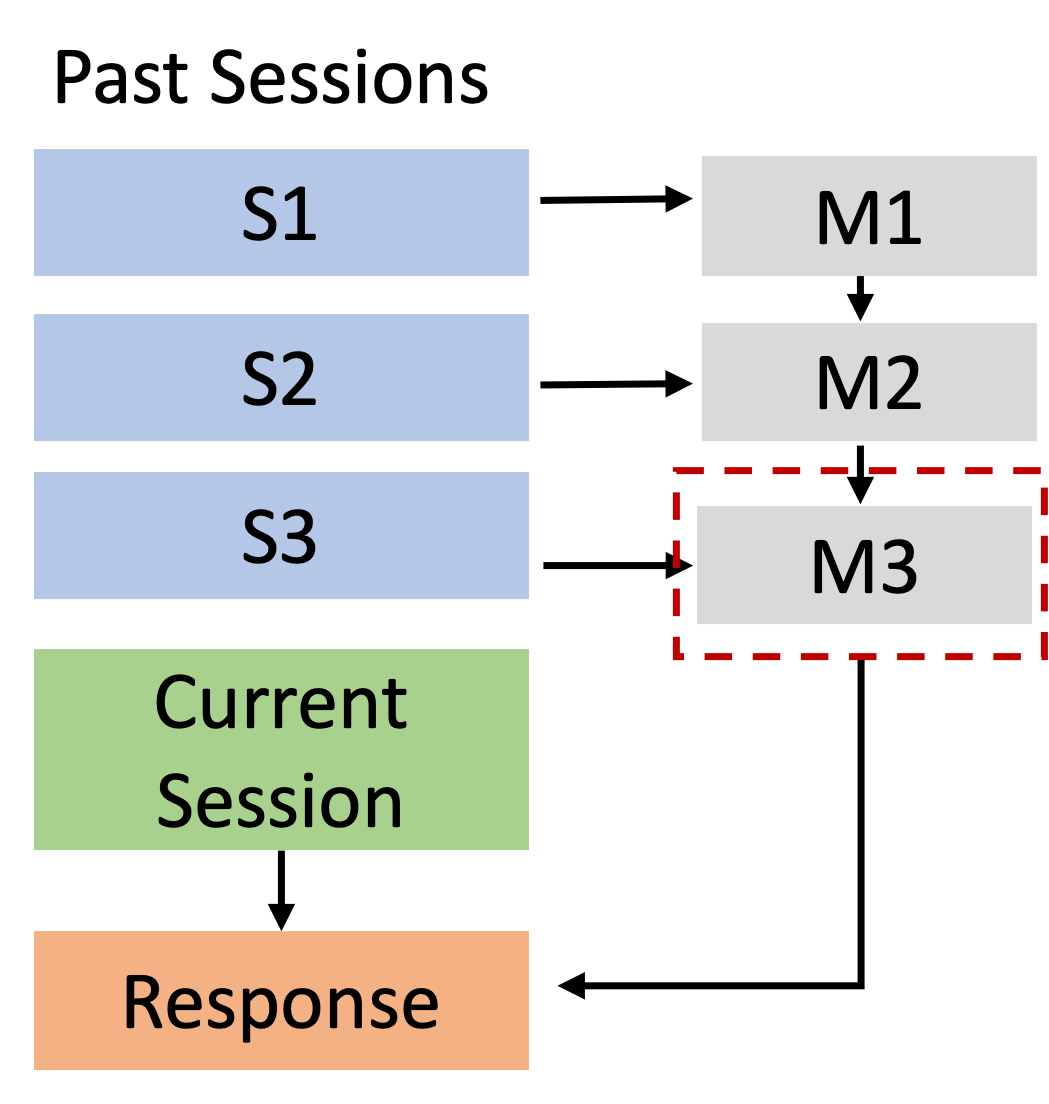

The approach, called recursive summarization, works by:

- First having the LLM create a brief summary of a short dialogue
- Then recursively updating this memory by combining the previous summary with new conversation context
- Finally using this constantly refreshed memory to generate consistent responses
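The three steps above can be sketched as a short loop. This is a minimal sketch under stated assumptions, not the authors' implementation: `llm` is any hypothetical function that takes a prompt string and returns text, and the prompt templates and helper names (`update_memory`, `respond`, `chat_with_memory`) are illustrative.

```python
from typing import Callable, List

# Hypothetical LLM interface: any function mapping a prompt string to a completion.
LLM = Callable[[str], str]

# Illustrative prompt templates (assumptions, not the paper's exact wording).
SUMMARIZE_PROMPT = "Summarize the key facts about the speakers in this dialogue:\n{dialogue}"
UPDATE_PROMPT = (
    "Previous memory:\n{memory}\n\nNew dialogue:\n{dialogue}\n\n"
    "Rewrite the memory so it also covers any new information."
)
RESPOND_PROMPT = "Memory:\n{memory}\n\nCurrent dialogue:\n{dialogue}\n\nReply:"


def update_memory(llm: LLM, memory: str, session: List[str]) -> str:
    """Steps 1 and 2: write the first summary, then fold each new session into it."""
    dialogue = "\n".join(session)
    if not memory:
        # Step 1: no memory yet, so summarize the first short dialogue.
        return llm(SUMMARIZE_PROMPT.format(dialogue=dialogue))
    # Step 2: combine the previous summary with the new context into a fresh memory.
    return llm(UPDATE_PROMPT.format(memory=memory, dialogue=dialogue))


def respond(llm: LLM, memory: str, current: List[str]) -> str:
    """Step 3: condition the reply on the latest memory plus the current turns."""
    return llm(RESPOND_PROMPT.format(memory=memory, dialogue="\n".join(current)))


def chat_with_memory(llm: LLM, sessions: List[List[str]], current: List[str]) -> str:
    """Run the recursive loop over past sessions, then answer the current dialogue."""
    memory = ""
    for session in sessions:
        memory = update_memory(llm, memory, session)
    return respond(llm, memory, current)
```

Because the model is just a callable, the loop can be exercised with a stub before wiring in a real API client. Note that the memory is a single string that is rewritten after every session rather than a growing log.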

This approach contrasts with existing methods that do one of the following:

- Store the entire dialogue history and rely on the model to parse it all (context-only approach)
- Use retrieval systems to find relevant past utterances (retrieval-based approach)
- Create fixed summaries that don’t evolve with new information (traditional memory-based approach)
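To make the contrast concrete, here is a sketch of how each of these three strategies might assemble the context handed to the model. It is illustrative only: the function names are hypothetical, and the word-overlap scoring in `retrieval_based` is a simple stand-in for a real retriever such as dense embedding search.

```python
from typing import List


def context_only(history: List[str], current: List[str]) -> str:
    # Context-only: concatenate every past utterance and let the model sort it out.
    return "\n".join(history + current)


def retrieval_based(history: List[str], current: List[str], k: int = 2) -> str:
    # Retrieval-based: keep the k past utterances sharing the most words with the
    # latest turn. Word overlap is a toy scorer standing in for a real retriever.
    query = set(current[-1].lower().split())
    ranked = sorted(
        history,
        key=lambda utterance: len(query & set(utterance.lower().split())),
        reverse=True,
    )
    return "\n".join(ranked[:k] + current)


def fixed_memory(summary: str, current: List[str]) -> str:
    # Traditional memory: a summary written once and never revised afterwards.
    return "\n".join([summary] + current)
```

The context-only prompt grows with every turn, the retrieval-based prompt depends on how well the scorer matches the query, and the fixed summary silently goes stale as the conversation moves on.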

The key innovation is that memory continuously evolves, incorporating new information while maintaining a coherent picture of the conversation history.

How Recursive Memory Generation Works

Memory Iteration: Building and Updating Dialog Summaries

The recursive memory approach maintains a coherent summary of past conversations that is updated after each dialogue session. Previous methods edited memory with “hard operations” such as appending or deleting entries, which tends to fragment the summary; this approach instead regenerates the entire memory holistically.
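The difference between the two update styles can be sketched as follows. `HardOpMemory` is a hypothetical stand-in for a memory edited with append and delete operations, and `regenerate_memory` assumes an `llm` callable that maps a prompt string to text; the prompt wording is an assumption, not the paper's.

```python
from typing import Callable, List

# Hypothetical LLM interface: any function mapping a prompt string to a completion.
LLM = Callable[[str], str]


class HardOpMemory:
    """Memory edited with discrete operations (the style the text contrasts against)."""

    def __init__(self) -> None:
        self.entries: List[str] = []

    def append(self, fact: str) -> None:
        self.entries.append(fact)

    def delete(self, fact: str) -> None:
        # Only exact matches can be removed; related facts are left untouched.
        if fact in self.entries:
            self.entries.remove(fact)

    def render(self) -> str:
        # Facts accumulate as a flat list; nothing merges or rewords related entries.
        return "\n".join(self.entries)


def regenerate_memory(llm: LLM, memory: str, session: str) -> str:
    # Holistic update: the model rewrites the whole memory in one pass and the
    # result replaces the old memory entirely.
    prompt = (
        f"Previous memory:\n{memory}\n\nNew session:\n{session}\n\n"
        "Write an updated memory that replaces the previous one."
    )
    return llm(prompt)
```

With hard operations, a fact like "User lives in Berlin" stays next to "User moved to Paris" unless a separate step explicitly deletes it; a holistic rewrite gives the model the chance to reconcile such facts in a single pass.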