As the adage goes, "your margin is my opportunity." That sums up Meta's push to build an in-house chip for training AI models. According to Reuters, the company has begun a small deployment of the chip after a successful test production run with Taiwan's TSMC (sorry, Intel). Meta already uses its own chips for inference, which means tailoring content to specific users after an AI model has been developed and trained. It plans to start using them for training by 2026.

From the article:

Meta's push to develop its own chips is part of a long-term strategy to reduce its massive infrastructure costs as it places expensive bets on AI tools to drive the company's growth.

Meta, which also owns Instagram and WhatsApp, has forecast total 2025 expenses of $114 billion to $119 billion. This includes up to $65 billion in capital expenditures, largely driven by AI infrastructure.

According to one of the sources, Meta's new training chip is a dedicated accelerator, meaning it is designed to handle only AI-specific tasks. This can make it more power-efficient than the integrated graphics processing units (GPUs) generally used for AI workloads.

Even if consumer applications like chatbots turn out to be overhyped, Meta can still use the technology to improve content recommendations and ad targeting. Advertising accounts for the majority of Meta's revenue, and even small improvements in targeting can generate billions of dollars in new revenue when advertisers see better results.

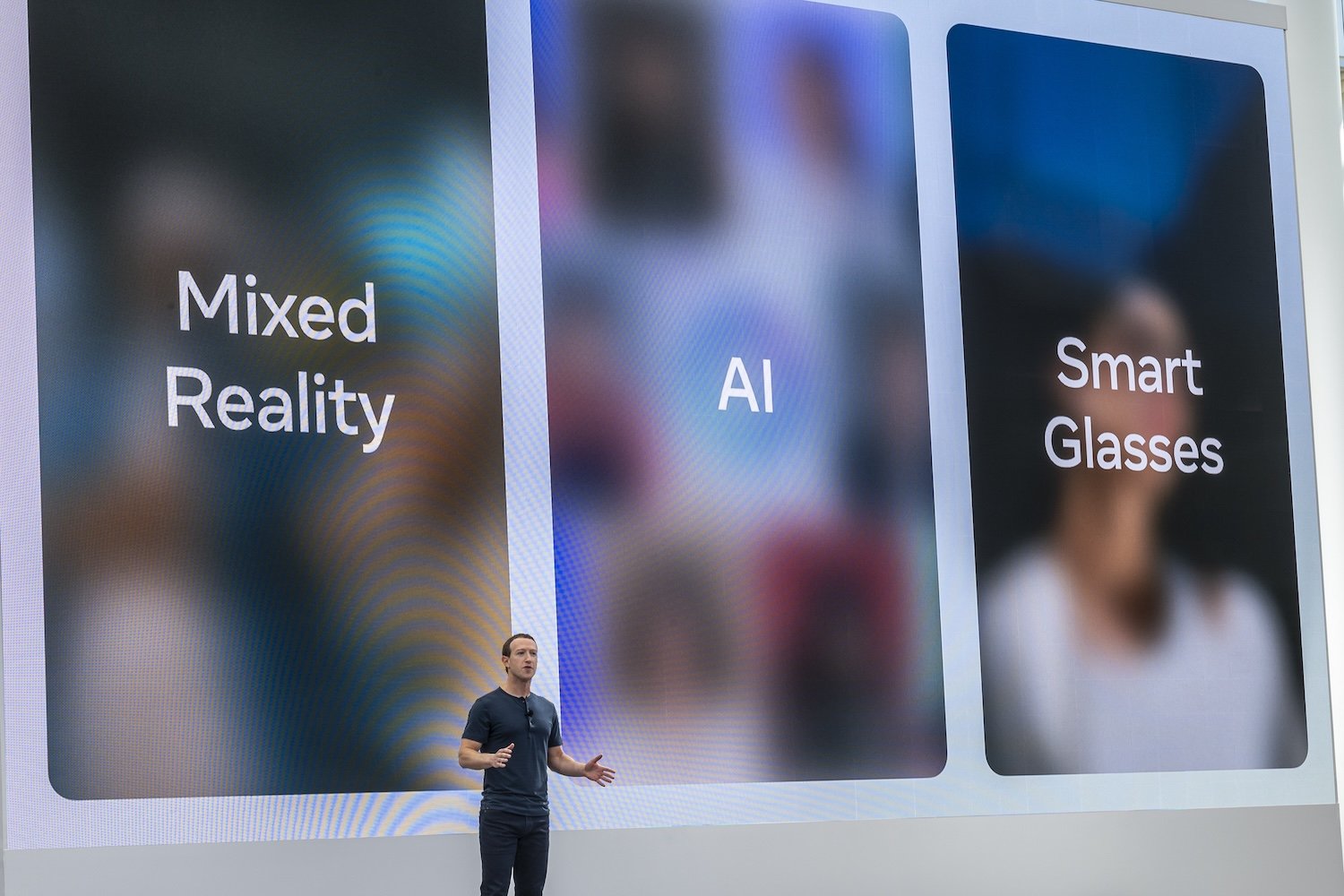

Despite some failures and poor results from Reality Labs, Meta has built strong hardware teams and has seen some success with its Ray-Ban smart glasses. Still, executives have warned their teams that the company's hardware efforts have not yet had the impact they hoped for: Meta's VR headsets sell in the low millions of units annually. Meta CEO Mark Zuckerberg has long worked to develop the company's own hardware platforms in order to reduce its reliance on Apple and Google.

Since 2022, major technology companies have paid Nvidia billions of dollars to stock up on its GPUs, which have become the industry standard for AI processing. Nvidia, which faces some competition from rivals like AMD, has been praised not only for the chips themselves but also for its CUDA toolkit for developing AI applications.

Nvidia reported late last year that nearly half of its revenue came from just four companies. All of them are building their own chips to cut out the middleman and reduce costs, even though they may have to wait many years to see a return. Amazon has its Inferentia chip, and Google has been developing its Tensor Processing Units (TPUs) for years. Investors, however, will only tolerate heavy spending for so long before they demand that Meta prove it pays off.

Nvidia's dependence on a few customers who are building their own processors, along with the rise of efficient AI models such as China's DeepSeek, has raised concerns about whether Nvidia can keep growing forever. However, CEO Jensen Huang has said he is optimistic that data centers will spend $1 trillion over the next five years building out infrastructure, which could keep his company growing into the 2030s. And, of course, most companies won't be able to develop their own chips the way Meta can.