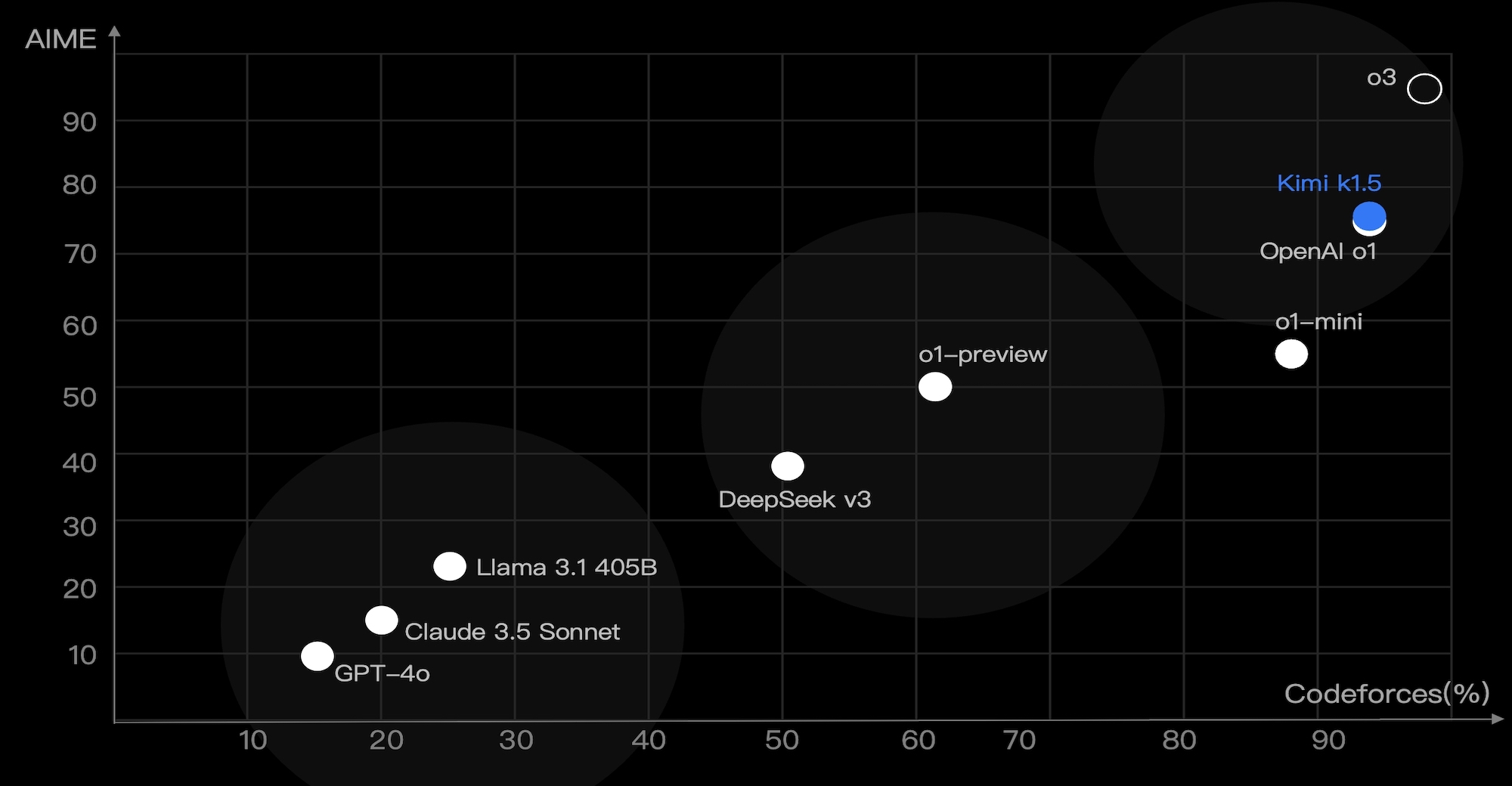

Kimi k1.5 sets a new benchmark in the AI arms race. As a multimodal thinking model, Kimi k1.5 achieves what no company outside OpenAI had previously managed: performance matching the full-powered o1 model on reasoning benchmarks, not just its preview or mini variants. This development marks a major leap forward in artificial intelligence and signals a new era of competition and innovation.

Raising the Bar for Long and Short CoT Reasoning

Kimi k1.5 excels in both Long CoT (Chain of Thought) and Short CoT tasks, demonstrating versatility and technical strength.

1. Long Context Scaling:

Kimi k1.5 scales the reinforcement learning (RL) context window to 128k tokens for long-chain reasoning, relying on partial rollouts to keep training efficient at that length. As a result, the model can handle longer, more complex tasks without sacrificing quality or speed.

2. Long2Short Optimization:

Kimi k1.5's Long2Short techniques use Long CoT strategies to improve a Short CoT model that completes tasks with as few tokens as possible. The resulting Short CoT model stays competitive while consuming fewer computational resources.
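The article does not spell out how a model is pushed toward shorter answers. One simple way to do it in RL, which this sketch assumes, is a length-based reward computed over a group of answers sampled for the same prompt: shorter correct answers earn more, while incorrect answers can only be penalized. The function name and the exact formula are illustrative assumptions, not the report's published recipe.

```python
from typing import List


def length_penalty_rewards(lengths: List[int], correct: List[bool]) -> List[float]:
    """Hypothetical length-based reward for k responses sampled for one prompt.

    Assumed form: lam_i = 0.5 - (len_i - min_len) / (max_len - min_len).
    Correct responses get lam_i (shorter ones earn more); incorrect responses
    get min(0, lam_i), so a wrong answer is never rewarded for being short.
    """
    if len(lengths) != len(correct):
        raise ValueError("lengths and correct must have the same size")
    min_len, max_len = min(lengths), max(lengths)
    if max_len == min_len:
        # Every response has the same length, so there is no length signal.
        return [0.0] * len(lengths)
    rewards = []
    for n, ok in zip(lengths, correct):
        lam = 0.5 - (n - min_len) / (max_len - min_len)
        rewards.append(lam if ok else min(0.0, lam))
    return rewards


# Three sampled answers (token counts) for the same prompt; the longest is wrong.
print(length_penalty_rewards([100, 300, 500], [True, True, False]))
# [0.5, 0.0, -0.5]
```

In a real training loop, a term like this would most likely be added to the correctness reward with some weight rather than used on its own, so that brevity never outweighs getting the answer right.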

Kimi k1.5 is not only competitive but also outperforms state-of-the-art models such as GPT-4o and Claude Sonnet 3.5 by wide margins on math, coding, and vision tasks, with performance margins of up to 550%.
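Reading a margin of "up to 550%" as a relative gain over the baseline model's score (an assumption, since the article does not say how the margin is computed), the arithmetic works out as follows, where $s_{\text{Kimi}}$ and $s_{\text{base}}$ are the two models' scores on the same benchmark:

$$
\text{relative gain} = \frac{s_{\text{Kimi}} - s_{\text{base}}}{s_{\text{base}}} \times 100\%,
\qquad
550\% \;\Rightarrow\; s_{\text{Kimi}} = (1 + 5.5)\, s_{\text{base}} = 6.5\, s_{\text{base}}.
$$

For example, a hypothetical baseline score of 10 would correspond to a score of 65.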

This performance leap raises expectations for what efficient, scalable models can do in both long and short reasoning modes.

A Technical Report Well Worth Reading

The Kimi team has released a comprehensive technical report detailing the methods, challenges, and breakthroughs behind Kimi k1.5. The report is a valuable resource for researchers and developers, covering topics from reinforcement learning (RL) scaling to infrastructure optimization.

The report highlights the simplicity of Kimi's training method, which achieves exceptional results without complex techniques such as Monte Carlo tree search, value functions, or process reward models. Instead, Kimi focuses on scaling RL effectively and on multimodal integration.
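To make "no value function" concrete, the sketch below uses REINFORCE with a group-mean baseline, a common value-free setup: several answers are sampled for each prompt, and each answer's advantage is its reward minus the group's average reward. This is an illustrative assumption, not the objective described in the Kimi report, and the function names are hypothetical.

```python
from statistics import mean
from typing import List


def group_mean_advantages(rewards: List[float]) -> List[float]:
    """Advantage of each sampled response relative to its group's mean reward.

    No learned value function: the baseline is simply the average reward of
    the k responses sampled for the same prompt.
    """
    baseline = mean(rewards)
    return [r - baseline for r in rewards]


def reinforce_loss(logprobs: List[float], rewards: List[float]) -> float:
    """REINFORCE-style surrogate loss, -mean(A_i * log pi(y_i | x)).

    logprobs[i] is the summed token log-probability of response i under the
    current policy (hypothetical input; a real trainer would get it from the model).
    """
    advantages = group_mean_advantages(rewards)
    return -mean([a * lp for a, lp in zip(advantages, logprobs)])


# Four answers to one prompt, scored 1.0 (correct) or 0.0 (incorrect).
rewards = [1.0, 0.0, 1.0, 0.0]
print(group_mean_advantages(rewards))  # [0.5, -0.5, 0.5, -0.5]
print(reinforce_loss([-1.0, -2.0, -1.5, -3.0], rewards))  # -0.3125
```

Minimizing this loss raises the log-probability of above-average answers and lowers it for below-average ones, without training a separate critic network.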

Abstract

The amount of high-quality training data is a major limit on scaling compute for language models. Scaling reinforcement learning (RL) opens a new axis for improving artificial intelligence: it lets large language models expand their training data by learning to explore with rewards, and scale computation along with it.

Prior published work in this area has not produced competitive results. In this report, we share the training practices behind Kimi k1.5, our latest multimodal LLM trained with RL. Our approach is simple but effective, achieving state-of-the-art reasoning performance across multiple benchmarks and modalities (e.g., the 94th percentile on Codeforces and 74.9% on MathVista), matching OpenAI's o1.

We also introduce techniques that use Long CoT strategies to improve Short CoT models. This yields state-of-the-art Short CoT performance (e.g., 60.8% Pass@1 on AIME, 94.6% on MATH500, and 47.3% on LiveCodeBench), outperforming GPT-4o and Claude Sonnet 3.5 by significant margins.
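Pass@1 is the fraction of problems solved when the model gets a single attempt. When several samples are drawn per problem, a standard way to compute it is the unbiased pass@k estimator from the Codex evaluation paper (Chen et al., 2021), sketched below; whether the Kimi report uses this exact protocol is not stated here, so treat it as an assumption.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate: n samples per problem, c of them correct.

    pass@k = 1 - C(n - c, k) / C(n, k); with k = 1 this reduces to c / n.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset must contain at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_1(results: List[Tuple[int, int]]) -> float:
    """Average pass@1 over a benchmark, given (n, c) pairs per problem."""
    return sum(pass_at_k(n, c, 1) for n, c in results) / len(results)


print(pass_at_k(n=8, c=2, k=1))  # 0.25
print(mean_pass_at_1([(4, 4), (4, 0)]))  # 0.5
```

Averaging over several samples per problem gives a lower-variance estimate of single-attempt accuracy than scoring one sample per problem.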

Three Key Takeaways

1. First-of-its-kind multimodal SOTA model: Kimi k1.5 pushes the boundaries of reinforcement learning with LLMs.

2. Simplicity wins: it achieves superior performance without complex methods such as Monte Carlo tree search or value functions.

3. Long2Short innovation: using Long CoT to optimize Short CoT sets new efficiency standards.

The full technical report is available on GitHub.

Jim Fan, Senior Research Scientist at NVIDIA, commented on Kimi k1.5 on X:

“Kimi shows strong multimodal performance (!)

The Kimi paper contains a lot more detail about the system design, including RL infrastructure, hybrid clusters, code sandboxes, and parallelism strategies, as well as learning details such as long context, CoT compression, curriculum, sampling strategy, and test case generation.”

Kimi.ai was founded by Zhilin Yang, a CMU PhD and the first author of Transformer-XL. He has worked with luminaries such as Yann LeCun (GLoMo) and Yoshua Bengio (HotpotQA). The core team includes inventors of LLM-related technologies such as RoPE, Group Normalization, ShuffleNet, and Relation Network.

Kimi.ai's growth has been remarkable, reaching 36 million monthly active users (MAUs) in its first year. As of December 2024, it ranks among the top five AI chatbot platforms in the world, behind only ChatGPT, Google Gemini, Claude, and Microsoft Copilot (source: SimilarWeb).

Kimi's journey is a significant step in AI development, and it points toward a more collaborative and innovative future for the field.
