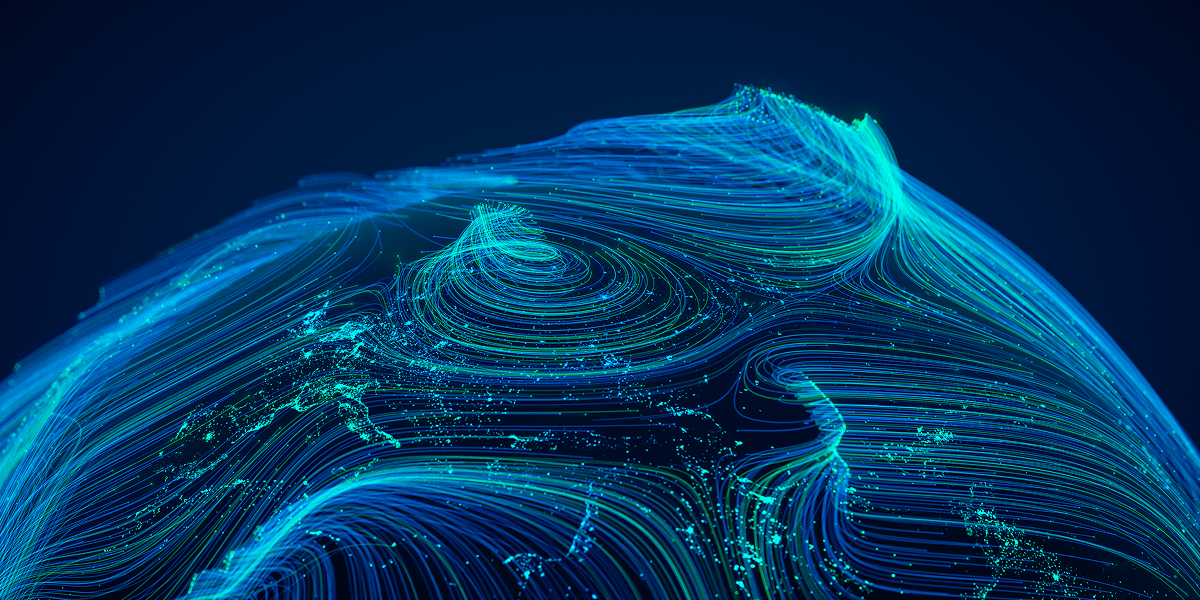

From reasoning agents to large language models (LLMs), today’s AI tools have unprecedented computational demands. Trillion-parameter models, workloads running on devices, and swarms of agents collaborating to complete a task all require a new paradigm in computing to become truly seamless and universal.

First, technical progress in silicon design and hardware is crucial to pushing the limits of computing. Second, recent advances in machine learning (ML) allow AI systems to be more efficient with less computational demand. Finally, the integration, orchestration and adoption of AI in applications, devices and systems are crucial for delivering tangible value and impact.

Silicon’s midlife crisis

AI evolved from classical ML, to deep learning, to generative AI. The most recent chapter of AI, which has made it mainstream, is based on two phases, training and inference, that are data- and energy-intensive in terms of computation, data movement and cooling. Moore’s Law, which holds that the number of transistors on a microchip doubles every two years, is also being challenged as it reaches a physical and economic plateau.

Over the last 40 years, digital technology and silicon chips have been pushing each other forward. Every step forward in processing ability frees innovators to imagine new products that require more power to run. In the AI age, this is happening at a rapid pace.

As more models become available, deployment at large scale puts the focus on inference and the application of trained models to everyday use cases. This transition requires appropriate hardware to handle inference efficiently. Central processing units (CPUs), which have managed general computing tasks for decades, were not designed for the computational demands of ML. Due to their parallel processing capabilities and high memory bandwidth, GPUs and other accelerator chips are now used to train complex neural networks.

But CPUs are the most widely deployed processors and can work as companions to processors such as GPUs and Tensor Processing Units (TPUs). AI developers are also reluctant to adapt their software to fit specialized hardware, preferring the consistency and ubiquity that CPUs provide. Chip designers are unlocking performance gains through optimized software tools and by adding novel processing features and data types to serve ML workloads. They are also integrating specialized units and accelerators and advancing silicon chip innovations, including custom silicon. AI itself is a useful tool for chip design, creating a positive feedback cycle in which AI helps optimize the chips it needs to run. Thanks to these enhancements and strong software support, modern CPUs are well suited to handle a variety of inference tasks.

Disruptive technologies are emerging that go beyond silicon-based processors to meet the growing AI compute and data demands. Lightmatter is an example of a unicorn startup that introduced photonic computing solutions, which use light for data transmission, to improve speed and energy efficiency. Quantum computing is another promising area of AI hardware. The integration of quantum computing and AI, while still years or decades away, could further transform fields such as drug discovery and genomics.

Understanding models and paradigms

The development of ML theories and network architectures has significantly improved the efficiency and capability of AI models. Today, the industry is moving away from monolithic systems to agent-based systems, characterized by smaller, more specialized models that work together to complete tasks at the edge, on devices such as smartphones or modern cars. This allows them to extract greater performance gains, such as faster model response times, with the same or less compute.

Researchers have developed techniques such as few-shot learning to train AI models with smaller datasets and fewer training iterations. With these techniques, AI systems can learn to perform new tasks from a small number of examples, which reduces the need for large datasets and lowers energy consumption. Quantization, a technique that reduces memory requirements by selectively lowering numeric precision, is helping to shrink model sizes with little loss in performance.
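To make the idea of trading precision for memory concrete, here is a minimal sketch of one common form of quantization: symmetric 8-bit quantization with a single shared scale. The function names and weight values are illustrative assumptions, not taken from any particular framework, and production toolchains typically use finer-grained schemes.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] plus one shared scale (symmetric scheme)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


# Illustrative weights (made-up values).
weights = [0.42, -1.27, 0.03, 0.88, -0.51]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding moves each value by at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight that would occupy 32 bits as a standard float fits in 8 bits after quantization, so storage for the weights drops by roughly a factor of four, at the cost of the small rounding error measured above.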

New architectures like retrieval-augmented generation (RAG) have simplified data access during both training and inference, reducing computational costs and overhead. DeepSeek R1, an open source LLM, is an excellent example of how to extract more output from the same hardware. R1’s advanced reasoning capabilities were achieved by applying reinforcement learning techniques in novel ways.
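The retrieval step behind RAG can be sketched in a few lines. This is a toy illustration under stated assumptions: word counts stand in for the learned embeddings a real system would use, the documents are invented, and the final call to a language model is omitted, so `embed`, `retrieve` and `build_prompt` are hypothetical helper names.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved passages so the model can ground its answer in them."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Invented knowledge base for illustration.
documents = [
    "Quantization reduces model size by lowering numeric precision.",
    "Edge processing moves computation closer to data sources.",
    "Photonic computing uses light for data transmission.",
]
prompt = build_prompt("How does quantization reduce model size?", documents)
print(prompt)
```

The point of the design is that knowledge lives in the document store rather than in the model's weights, so the store can be updated without retraining the model.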

The integration of heterogeneous computing systems, which combine different processing units such as CPUs and GPUs with specialized accelerators, has further optimized AI model performance. This approach allows workloads to be distributed efficiently across various hardware components, optimizing computational efficiency and throughput for each use case.
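As a rough illustration of distributing workloads across different processing units, the sketch below greedily places each task on whichever device would finish it earliest. The device names, workload kinds and throughput figures are made-up assumptions; real schedulers also account for data movement, memory limits and power.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    throughput: dict  # work units per millisecond for each supported workload kind
    busy_until: float = 0.0  # time (ms) at which the device becomes free


def assign(tasks, devices):
    """Greedy placement: each task goes to the device that would finish it earliest."""
    plan = []
    for kind, work in tasks:
        # Only devices that support this kind of workload are candidates.
        candidates = [d for d in devices if kind in d.throughput]
        best = min(candidates, key=lambda d: d.busy_until + work / d.throughput[kind])
        best.busy_until += work / best.throughput[kind]
        plan.append((kind, best.name))
    return plan


# Hypothetical devices and throughput values, chosen only for illustration.
devices = [
    Device("cpu", {"control": 10.0, "matmul": 1.0}),
    Device("gpu", {"matmul": 20.0}),
    Device("npu", {"matmul": 8.0, "conv": 15.0}),
]
tasks = [("matmul", 400.0), ("control", 50.0), ("conv", 300.0), ("matmul", 400.0)]
plan = assign(tasks, devices)
print(plan)
```

With these numbers, the matrix multiplications land on the accelerator that handles them fastest while control logic stays on the CPU, which is the division of labor the paragraph above describes.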

Orchestrating AI

As AI becomes an ambient capability running in the background of many tasks and workflows, agents are taking charge and making decisions. Examples include customer support and edge use cases where multiple agents coordinate tasks across devices.

As AI is increasingly used in everyday life, user experience becomes crucial for mass adoption. AI is itself a key enabler of better interactions between users and technology, in features such as predictive text on touch keyboards and adaptive gearboxes in vehicles.

Edge processing is also accelerating AI’s diffusion into everyday applications by bringing computation closer to data sources. Smart cameras, autonomous cars, and wearable tech now process information locally to reduce latency and improve efficiency. The development of energy-efficient chips and advances in CPU design have made it possible to perform complex AI tasks even on devices with limited resources. This shift towards heterogeneous computing enhances ambient intelligence, where interconnected devices create responsive and adaptable environments that meet user needs.

Seamless AI requires industry collaboration through common standards, platforms, and frameworks. AI in the modern world also brings new risks. Adding more complex software to consumer devices and personalizing the experience gives hackers a larger attack surface. This requires stronger security at both the software and silicon levels, including cryptographic safeguards, and transforms the trust model in compute environments.

According to a Darktrace survey conducted in 2024, more than 70% of respondents reported that AI-powered threats have a significant impact on their organizations, and 60% said their organizations were not adequately prepared to defend themselves against AI-powered attacks.

Collaboration will be essential in establishing common frameworks. Universities provide foundational research; companies use findings to develop practical solutions; and governments set policies for ethical and responsible deployment. Anthropic, for example, is setting industry standards with frameworks such as the Model Context Protocol to unify how developers connect AI systems and data. Arm is also a leader in standards-based, open source initiatives, including ecosystem development that accelerates and harmonizes the chiplet market by using common frameworks and standards. Arm also optimizes open source AI models and frameworks for inference on the Arm compute platform, without the need for customized tuning.

The technical decisions made today will determine how far AI can go in becoming a general purpose technology, similar to electricity or semiconductors. Hardware-agnostic platforms, standards-based approaches, and continued incremental improvements to critical workhorses such as CPUs all help deliver on the promise of AI as a seamless, silent capability for both individuals and businesses. Open source contributions also allow a wider range of stakeholders to take part in AI advancements. By sharing tools, knowledge and resources, the community can foster innovation and ensure that AI benefits are available to everyone. Learn more about Arm’s approach to enabling AI anywhere.

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written or edited by MIT Technology Review’s editorial staff. This content was researched, designed and written by humans, including writers, editors and analysts. This includes the creation of surveys and the collection of data for surveys. AI tools were used only in secondary production processes that passed human review.