DeepSeek AI has announced the release of DeepSeek-Prover-V2, a groundbreaking open-source large language model specifically designed for formal theorem proving within the Lean 4 environment. This latest iteration builds upon previous work by introducing an innovative recursive theorem-proving pipeline, leveraging the power of DeepSeek-V3 to generate its own high-quality initialization data. The resulting model achieves state-of-the-art performance in neural theorem proving and is accompanied by the introduction of ProverBench, a new benchmark for evaluating mathematical reasoning capabilities.

Pioneering Cold-Start Reasoning Data Generation

A key innovation of DeepSeek-Prover-V2 lies in its unique cold-start training procedure. This process begins by prompting the powerful DeepSeek-V3 model to decompose complex mathematical theorems into a series of more manageable subgoals. Simultaneously, DeepSeek-V3 formalizes these high-level proof steps in Lean 4, effectively creating a structured sequence of sub-problems.

To handle the computationally intensive proof search for each subgoal, the researchers employed a smaller 7B parameter model. Once all the decomposed steps of a challenging problem are successfully proven, the complete step-by-step formal proof is paired with DeepSeek-V3’s corresponding chain-of-thought reasoning. This ingenious approach allows the model to learn from a synthesized dataset that integrates both informal, high-level mathematical reasoning and rigorous formal proofs, providing a strong cold start for subsequent reinforcement learning.

Reinforcement Learning for Enhanced Reasoning

Building upon the synthetic cold-start data, the DeepSeek team curated a selection of challenging problems that the 7B prover model couldn’t solve end-to-end, but for which all subgoals had been successfully addressed. By combining the formal proofs of these subgoals, a complete proof for the original problem is constructed. This formal proof is then linked with DeepSeek-V3’s chain-of-thought outlining the lemma decomposition, creating a unified training example of informal reasoning followed by formalization.

The prover model is then fine-tuned on this synthetic data, followed by a reinforcement learning stage. This stage utilizes binary correct-or-incorrect feedback as the reward signal, further refining the model’s ability to bridge the gap between informal mathematical intuition and the precise construction of formal proofs.

State-of-the-Art Performance

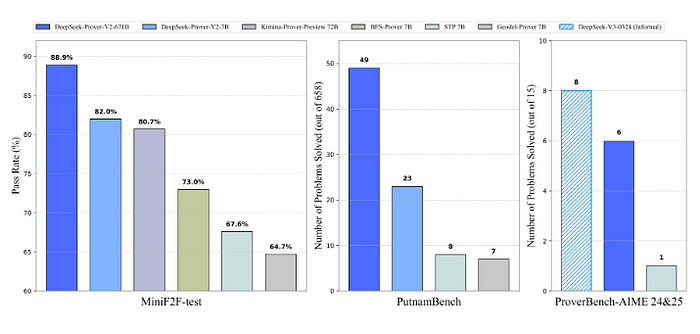

The culmination of this innovative training process is DeepSeek-Prover-V2–671B, a model boasting 671 billion parameters. This model has achieved remarkable results, demonstrating state-of-the-art performance in neural theorem proving. It reached an impressive 88.9% pass ratio on the MiniF2F-test and successfully solved 49 out of 658 problems from PutnamBench. The proofs generated by DeepSeek-Prover-V2 for the miniF2F dataset are publicly available for download, allowing for further scrutiny and analysis.

Introducing ProverBench: A New Standard for Evaluation

In addition to the model release, DeepSeek AI has introduced ProverBench, a new benchmark dataset comprising 325 problems. This benchmark is designed to offer a more comprehensive evaluation of mathematical reasoning capabilities across different levels of difficulty.

ProverBench includes 15 problems formalized from recent AIME (American Invitational Mathematics Examination) competitions (AIME 24 and 25), providing authentic challenges at the high-school competition level. The remaining 310 problems are drawn from curated textbook examples and educational tutorials, offering a diverse and pedagogically sound collection of formalized mathematical problems spanning various areas:

ProverBench aims to facilitate a more thorough assessment of neural theorem provers across both challenging competition problems and fundamental undergraduate-level mathematics.

Availability

DeepSeek AI is releasing DeepSeek-Prover-V2 in two model sizes to cater to different computational resources: a 7B parameter model and the larger 671B parameter model. DeepSeek-Prover-V2–671B is built upon the robust foundation of DeepSeek-V3-Base. The smaller DeepSeek-Prover-V2–7B is built upon DeepSeek-Prover-V1.5-Base and features an extended context length of up to 32K tokens, allowing it to process longer and more complex reasoning sequences.

The release of DeepSeek-Prover-V2 and the introduction of ProverBench mark a significant step forward in the field of neural theorem proving. By leveraging a recursive proof search pipeline and introducing a challenging new benchmark, DeepSeek AI is empowering the community to develop and evaluate more sophisticated and capable AI systems for formal mathematics.

Link:

The post first appeared on .