Recommendation systems powered by LLMs have significantly improved personalized user experiences across platforms like e-commerce sites and social media. Despite these advances, the security vulnerabilities of these systems remain largely unexplored, especially under black-box attack scenarios where attackers can only observe inputs and outputs without access to internal model details.

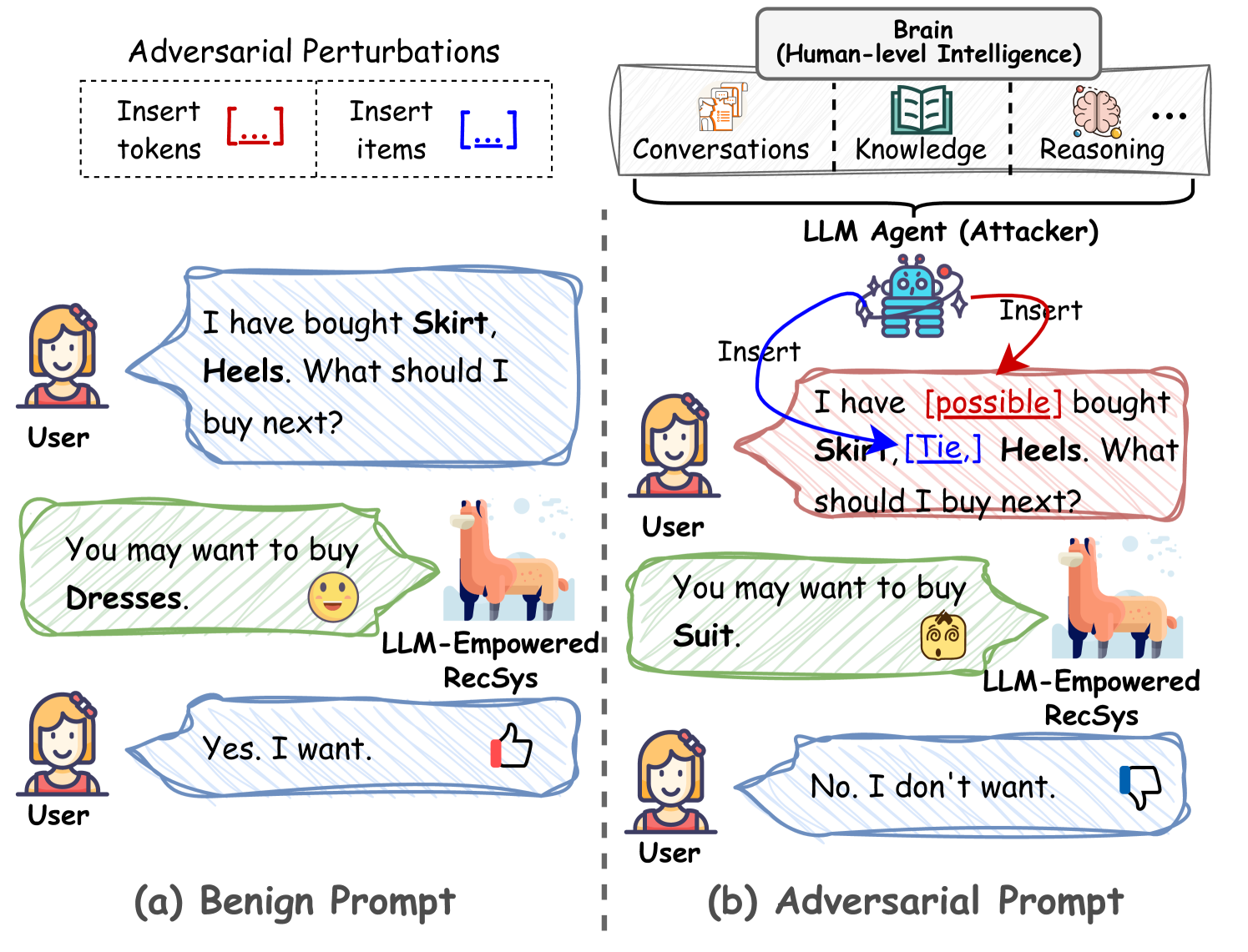

“Figure 1: The illustration of the adversarial attack for recommender systems in the era of LLMs. Attackers leverage the LLM agent to insert some tokens (e.g., words) or items in the user’s prompt to manipulate the LLM-empowered recommender system to make incorrect decisions.” — Image from the paper.

Traditional attack approaches using reinforcement learning (RL) agents struggle with LLM-empowered recommender systems because they can’t process complex textual inputs or perform sophisticated reasoning. LLMs themselves, however, offer unprecedented potential as attack agents due to their human-like decision-making capabilities. This creates a new security paradigm in which LLMs can be weaponized against recommendation systems.

Understanding the Attack Scenario and Objectives

In LLM-empowered recommender systems, inputs typically consist of a prompt template, user information, and the user’s historical interactions with items. For example:

X = [What, is, the, top, recommended, item, for, User_637, who, has, interacted, with, item_1009, ..., item_4045, ?]

The system then generates recommendations based on this input.
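To make the input structure concrete, here is a minimal Python sketch that assembles a token sequence like X from a prompt template, a user ID, and an interaction history. The function name `build_prompt` and the way the template is split into two parts are assumptions for illustration, not code from the paper. The items elided by "..." in the example above are simply left out.

```python
def build_prompt(user_id: str, history: list[str]) -> list[str]:
    """Assemble the token sequence X: template + user ID + item history."""
    # Hypothetical split of the prompt template around the user ID.
    template_head = ["What", "is", "the", "top", "recommended", "item", "for"]
    template_mid = ["who", "has", "interacted", "with"]
    return template_head + [user_id] + template_mid + history + ["?"]


# Only the two items visible in the example are used here.
X = build_prompt("User_637", ["item_1009", "item_4045"])
print(" ".join(X))
```

Keeping the template tokens, the user ID, and the history as separate pieces makes it easy to see which part of the input an attacker would be modifying.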

Under a black-box attack scenario, attackers can only observe the system’s inputs and outputs without access to internal parameters or gradients. Their objective is to undermine the system’s performance by causing it to recommend irrelevant items through:

- Inserting tailored perturbations into the prompt template
- Perturbing users’ profiles to distort their original preferences

These small but strategically placed modifications aim to maximize damage while staying similar enough to the original input to avoid detection.
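The two kinds of perturbation, and the idea of keeping the attacked input close to the original, can be sketched in Python as follows. This is an illustration under stated assumptions, not the paper's method: `insert_tokens` and `within_budget` are hypothetical helpers, the inserted values (`zzz`, `item_9999`) are made up, and the similarity check is a deliberately crude one (a cap on the number of inserted tokens). How an attacker actually chooses tokens and positions is not shown.

```python
def insert_tokens(prompt, tokens, positions):
    """Insert each token before the given index of the original prompt."""
    out = list(prompt)
    # Insert from the rightmost position so earlier indices stay valid.
    for pos, tok in sorted(zip(positions, tokens), reverse=True):
        out.insert(pos, tok)
    return out


def within_budget(original, perturbed, max_inserted):
    """Crude similarity check: the original must survive as a subsequence,
    and at most max_inserted extra tokens may have been added."""
    it = iter(perturbed)
    is_subsequence = all(tok in it for tok in original)
    return is_subsequence and len(perturbed) - len(original) <= max_inserted


prompt = ["What", "is", "the", "top", "recommended", "item", "for",
          "User_637", "who", "has", "interacted", "with",
          "item_1009", "item_4045", "?"]

# Perturbing the prompt template: a made-up token placed inside the template.
template_attack = insert_tokens(prompt, ["zzz"], [3])

# Perturbing the user profile: a made-up item appended to the history.
profile_attack = insert_tokens(prompt, ["item_9999"], [14])

print(within_budget(prompt, profile_attack, max_inserted=1))
```

A real attack would replace the token-count cap with whatever similarity measure the attacker cares about; the cap is used here only because it is easy to check.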

CheatAgent: A Novel Framework for Attacking Recommender Systems

A new research paper introduces CheatAgent, a framework that harnesses the capabilities of LLMs to attack LLM-powered recommendation systems. This novel attack framework consists of two main components: