Adi Robertson is a senior tech editor who focuses on VR, online platforms, and free expression. Adi has covered video games, biohacking, and more for The Verge since 2011.

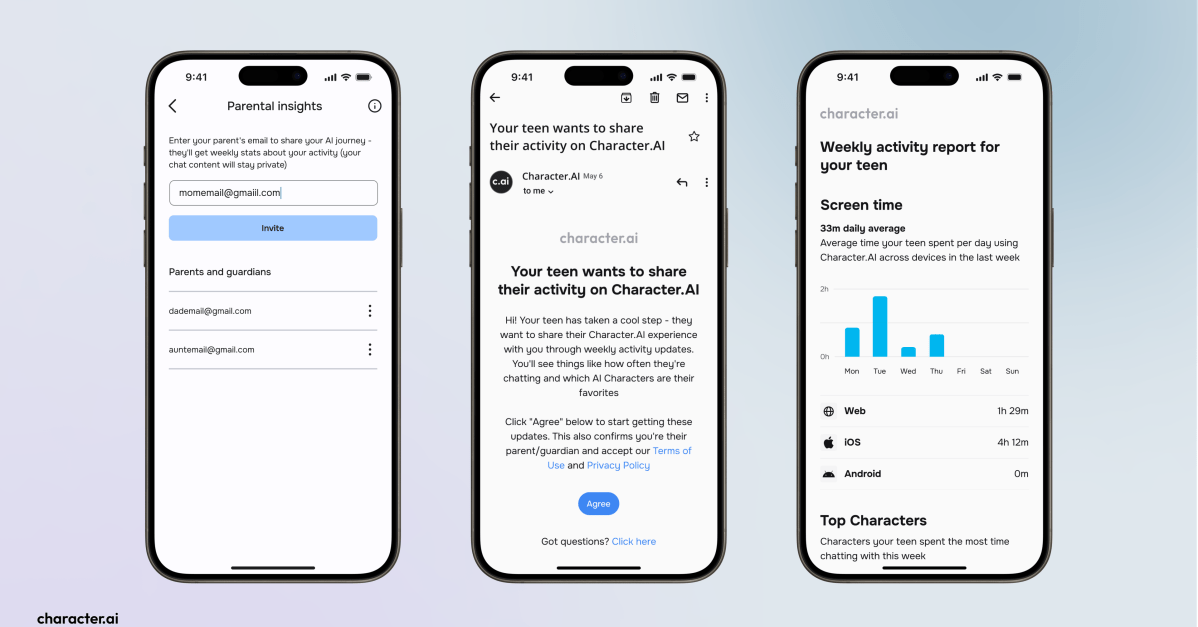

This report is optional, and minors can set it up in Character.AI’s settings; parents do not need to have an account. The company notes that the report is an overview, not a full log of teens’ activity, so the contents of chatbot conversations will not be shared. The platform does not allow children under 13 in most places, or anyone under 16 in Europe.

Since last year, Character.AI has introduced several new features for underage users, amid concerns (and even legal complaints) about the service. The platform, which is popular with teenagers, lets users customize chatbots that they can talk to or share publicly. Multiple lawsuits allege that these bots offered inappropriately sexualized material or encouraged self-harm. Apple and Google, the latter of which hired Character.AI’s founders last year, have also warned the company about the app’s content.

The company says it has redesigned its system. Among other changes, it has moved users under 18 to a model trained to avoid “sensitive output” and added more prominent notifications reminding users that bots are not real people. This is unlikely to be the last step the company is asked to take, especially given growing interest in AI regulation and child protection laws.