On February 10, ByteDance’s Doubao large model team, in collaboration with Beijing Jiaotong University and the University of Science and Technology of China, unveiled VideoWorld, a video-generation model that is now open-sourced.

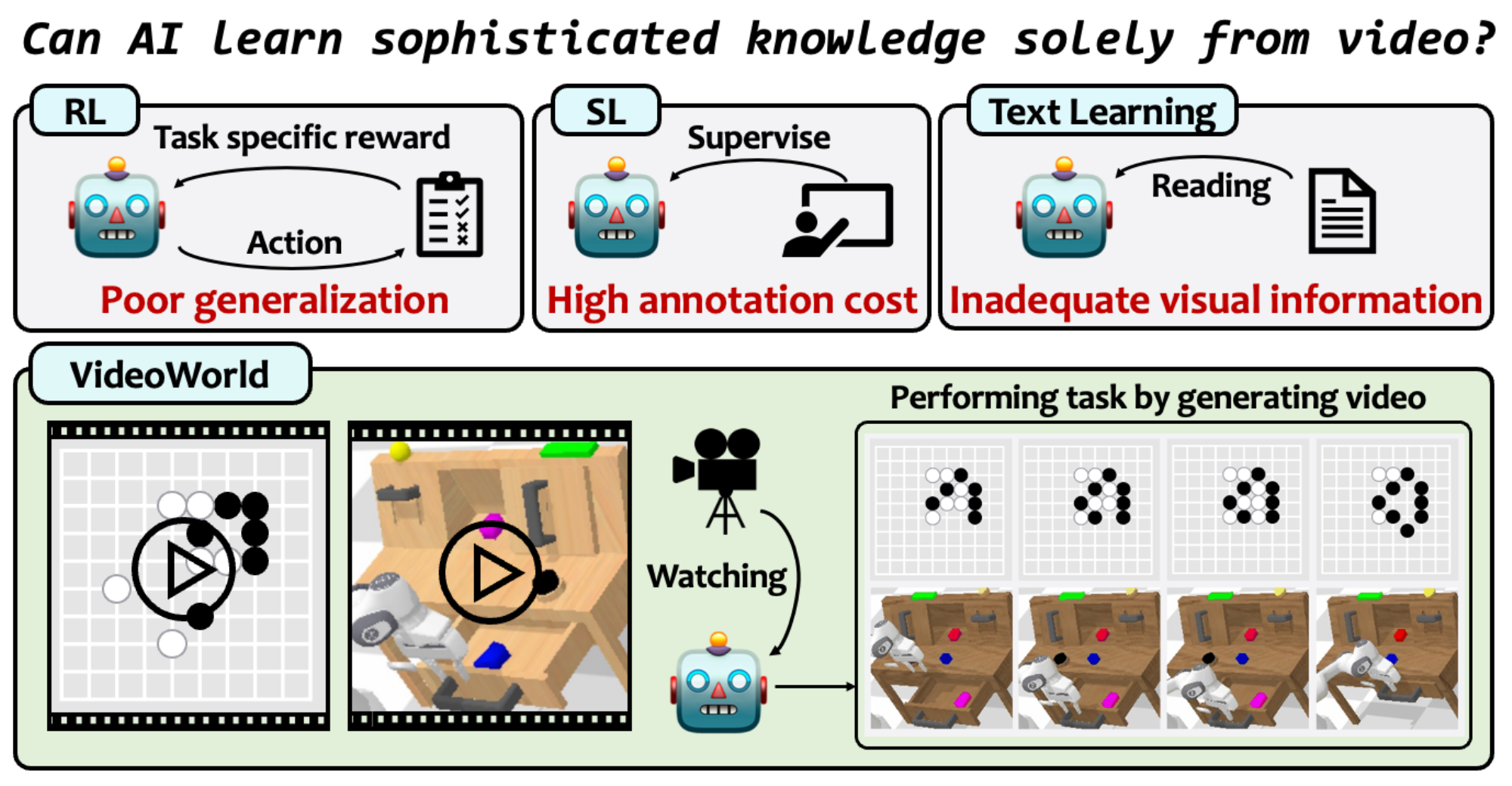

VideoWorld is a multimodal model that uses video data exclusively to develop reasoning, decision-making, and planning capabilities.

The team built two experimental environments to train VideoWorld: video-based Go gameplay and a video-based robotic simulator. The model is trained on an offline dataset of video demonstrations. It uses a naive autoregressive framework that combines a VQ-VAE encoder-decoder with an autoregressive Transformer.
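The two pieces named above can be pictured with a small toy sketch. It is not VideoWorld's code: the two-dimensional codebook, the patch vectors, and the `toy_next_token` rule are all hypothetical stand-ins. It only shows the general pattern of a VQ-style encoder mapping continuous features to discrete token ids, followed by an autoregressive loop that predicts each new token from the ones before it.

```python
def quantize(vector, codebook):
    """Return the index of the codebook entry closest to `vector` (squared L2)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(codebook)), key=lambda i: sq_dist(vector, codebook[i]))


def encode_frame(patches, codebook):
    """Turn a frame (a list of patch feature vectors) into discrete token ids."""
    return [quantize(patch, codebook) for patch in patches]


def generate(next_token_fn, prompt, steps):
    """Autoregressive decoding: each new token depends on all previous tokens."""
    tokens = list(prompt)
    for _ in range(steps):
        tokens.append(next_token_fn(tokens))
    return tokens


# Hypothetical codebook with four 2-D entries (a real VQ-VAE learns its codebook).
CODEBOOK = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]


def toy_next_token(tokens):
    """Stand-in for the Transformer: a fixed rule that cycles through token ids."""
    return (tokens[-1] + 1) % len(CODEBOOK)


# Hypothetical patch features for one frame.
frame = [(0.1, 0.9), (0.8, 0.2), (0.9, 1.1)]
prompt = encode_frame(frame, CODEBOOK)        # discrete tokens for the observed frame
sequence = generate(toy_next_token, prompt, steps=3)
print(prompt, sequence)
```

In a real system the codebook and the next-token predictor are both learned, and the predicted tokens are decoded back into frames; the sketch keeps only the data flow.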


Video encoding typically requires hundreds or even thousands of discrete tokens to capture frame-level detail, so the knowledge relevant to a task is spread sparsely across those tokens. To address this, VideoWorld introduces a Latent Dynamics Model (LDM), which compresses the visual changes between frames into compact latent representations and makes knowledge extraction more efficient.

Multi-step changes are a good example: a sequence of board changes in Go, or a sequence of coordinated actions in robotic control, unfolds over many frames. VideoWorld compresses these changes into compact embeddings, which enriches the policy representation and encodes guidance for forward planning.
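To see why encoding changes is cheaper than encoding whole frames, consider a hand-written toy on a 9×9 Go board. This is only an illustration of the idea: the LDM learns its compression from video, whereas the `encode_change` and `compress_future` helpers below are hypothetical and simply diff board states. Captures are ignored for simplicity.

```python
EMPTY, BLACK, WHITE = 0, 1, 2
SIZE = 9  # 9x9 board, so each full frame has 81 cells


def encode_change(prev_board, next_board):
    """Return (cell_index, new_value) pairs for cells that differ between frames."""
    return [(i, b) for i, (a, b) in enumerate(zip(prev_board, next_board)) if a != b]


def apply_change(board, change):
    """Rebuild the next frame from the previous frame plus a compact change."""
    new_board = list(board)
    for index, value in change:
        new_board[index] = value
    return new_board


def compress_future(frames, start, horizon):
    """Encode up to `horizon` frame-to-frame changes following frame `start`."""
    stop = min(start + horizon, len(frames) - 1)
    return [encode_change(frames[t], frames[t + 1]) for t in range(start, stop)]


# A three-frame clip: empty board, Black plays the center, White replies.
empty = [EMPTY] * (SIZE * SIZE)
frame1 = apply_change(empty, [(40, BLACK)])
frame2 = apply_change(frame1, [(30, WHITE)])
frames = [empty, frame1, frame2]

codes = compress_future(frames, start=0, horizon=2)
full_cells = sum(len(f) for f in frames[1:])   # cells needed to store both frames
change_items = sum(len(c) for c in codes)      # items needed to store the changes
print(codes, full_cells, change_items)
```

Two future frames take 162 cells when stored whole, but only two (position, color) pairs when stored as changes, and those pairs are exactly the information a player needs to plan ahead.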

Despite having only 300 million parameters, VideoWorld performs strongly: it reached a professional 5-dan level in 9×9 Go and executed robotic tasks in diverse environments.

AI’s visual-learning capabilities are expected to accelerate the development of new AI applications. Great Wall Securities’ research report highlights the ongoing improvements of multimodal AI models, including ByteDance’s Doubao AI and Kuaishou’s Kling AI models, in China. These models are enhancing video production with precise semantic understanding and consistent multi-shot composition. AI applications will iterate quickly as underlying AI capabilities improve, resulting in increased token usage across industries.