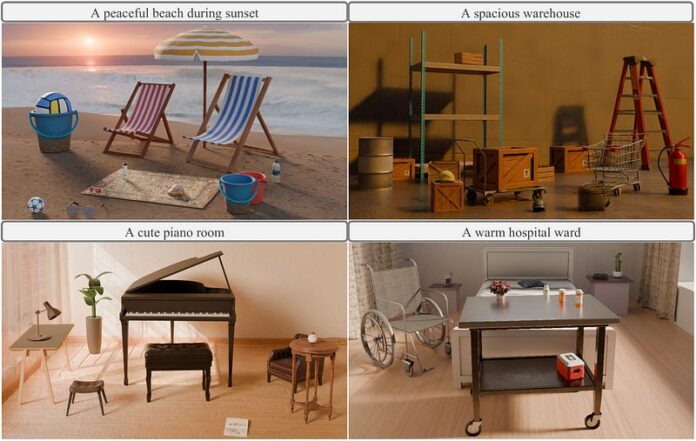

Generating interactive 3D scenes from text is essential for gaming, virtual reality, and embodied AI applications. Unlike single-geometry generation, interactive scene synthesis requires arranging many objects in realistic layouts that preserve natural interactions, functional roles, and physical constraints. For example, chairs should face tables so they can be used for seating, and small items must sit inside cabinets or on shelves without interpenetrating them.
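To make the "chairs should face tables" requirement concrete, here is a minimal sketch of one way such an orientation constraint could be checked in a top-down view. It assumes each object is reduced to a 2D center plus a yaw angle, and the `Object2D` class, the `faces` function, and the 30-degree tolerance are all hypothetical illustrations, not part of Scenethesis.

```python
import math
from dataclasses import dataclass


@dataclass
class Object2D:
    """Hypothetical top-down object: center (x, y) and yaw in radians.

    yaw = 0 means the object's front points along +x.
    """
    name: str
    x: float
    y: float
    yaw: float = 0.0


def faces(obj: Object2D, target: Object2D, max_angle_deg: float = 30.0) -> bool:
    """Return True if obj's front direction points at target within max_angle_deg."""
    # Unit vector along obj's front direction.
    fx, fy = math.cos(obj.yaw), math.sin(obj.yaw)
    # Vector from obj's center to target's center.
    dx, dy = target.x - obj.x, target.y - obj.y
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return False  # Coincident centers: orientation is undefined.
    # Cosine of the angle between the front direction and the direction to target.
    cos_angle = (fx * dx + fy * dy) / dist
    return cos_angle >= math.cos(math.radians(max_angle_deg))


table = Object2D("table", 0.0, 0.0)
cabinet = Object2D("cabinet", 0.0, 3.0)
chair = Object2D("chair", -1.0, 0.0, yaw=0.0)  # Front points along +x, toward the table.
print(faces(chair, table))    # True
print(faces(chair, cabinet))  # False
```

A dot-product test like this is cheap enough to run over every chair-table pair in a layout; the angular tolerance is an arbitrary choice here and would need tuning for real furniture.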

Current approaches face significant limitations. Learning-based methods rely on small-scale indoor datasets such as 3D-FRONT, which limits scene diversity and layout complexity. Large Language Models (LLMs) can draw on knowledge from the text domain, but they lack visual perception, so they produce unnatural object placements that violate common-sense spatial relationships.

Scenethesis addresses these challenges through a key insight: visual perception can bridge the spatial gap that LLMs lack. This training-free agentic framework integrates LLM-based scene planning with vision-guided layout refinement to create diverse, realistic, and physically plausible interactive 3D scenes.

The problem with current scene generation approaches

Traditional interactive scene generation methods fall short in different ways: manual design is labor-intensive and does not scale, while procedural approaches produce oversimplified scenes that fail to capture realistic spatial relationships.

Recent deep learning approaches based on autoregressive and diffusion models enable end-to-end 3D layout generation, but they rely on object-annotated datasets such as 3D-FRONT. These datasets are small in scale, limited to indoor environments, and often contain collisions between objects. They primarily model the layout of large furniture while neglecting smaller objects and their functional interactions.
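As an illustration of what "contain collisions" means for a layout, the sketch below flags every pair of objects whose axis-aligned bounding boxes interpenetrate. It is a minimal sketch under simplifying assumptions: objects are represented by hypothetical `Box` records with min/max corners, contact (zero overlap) is not counted as a collision, and real meshes would need a finer test than bounding boxes.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Box:
    """Hypothetical axis-aligned 3D bounding box given by min/max corners (meters)."""
    name: str
    min_xyz: tuple[float, float, float]
    max_xyz: tuple[float, float, float]


def overlaps(a: Box, b: Box, tol: float = 1e-6) -> bool:
    """True if the boxes interpenetrate by more than tol on every axis.

    Boxes that merely touch (overlap <= tol on some axis) are not collisions.
    """
    return all(
        min(a.max_xyz[i], b.max_xyz[i]) - max(a.min_xyz[i], b.min_xyz[i]) > tol
        for i in range(3)
    )


def find_collisions(layout: list[Box]) -> list[tuple[str, str]]:
    """Return the names of every pair of boxes in the layout that interpenetrate."""
    return [(a.name, b.name) for a, b in combinations(layout, 2) if overlaps(a, b)]


layout = [
    Box("bed", (0.0, 0.0, 0.0), (2.0, 1.6, 0.5)),
    Box("nightstand", (1.9, 0.0, 0.0), (2.4, 0.5, 0.6)),  # Pushed 10 cm into the bed.
    Box("lamp", (2.0, 0.1, 0.6), (2.2, 0.3, 1.0)),        # Resting on the nightstand.
    Box("wardrobe", (3.0, 0.0, 0.0), (4.0, 0.6, 2.0)),
]
print(find_collisions(layout))  # [('bed', 'nightstand')]
```

The pairwise check is quadratic in the number of objects, which is fine for a single room; a spatial index would be the usual choice for much larger scenes.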

LLMs expand scene diversity by leveraging common-sense knowledge from text, but their lack of visual perception prevents them from accurately reproducing real-world spatial relations. As shown in Figure 2, LLM-generated scenes often misorient objects (chairs facing cabinets instead of tables) and misplace them (cabinets placed against windows). Small objects are restricted to predefined locations, for example only on top of furniture and never inside it. This lack of realism disrupts object functionality and spatial coherence, making the scenes impractical for real-world use.
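The distinction between placing a small object "on top of" furniture and "inside" it can be expressed as a simple geometric predicate. The sketch below assumes hypothetical axis-aligned `Box` records with z as the up axis, and it treats a container's bounding box as its usable interior, which ignores walls and shelf boards. It is an illustration of the on/inside distinction, not how Scenethesis represents support relations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical axis-aligned 3D bounding box (meters); z is the up axis."""
    name: str
    min_xyz: tuple[float, float, float]
    max_xyz: tuple[float, float, float]


def footprint_within(small: Box, large: Box, tol: float = 1e-6) -> bool:
    """True if small's horizontal (x, y) extent lies within large's extent."""
    return all(
        large.min_xyz[i] - tol <= small.min_xyz[i]
        and small.max_xyz[i] <= large.max_xyz[i] + tol
        for i in (0, 1)
    )


def support_relation(small: Box, large: Box, tol: float = 1e-3) -> str:
    """Classify small relative to large as 'on', 'inside', or 'none'."""
    if not footprint_within(small, large):
        return "none"
    # Resting on the top surface: small's bottom meets large's top.
    if abs(small.min_xyz[2] - large.max_xyz[2]) <= tol:
        return "on"
    # Vertically enclosed between the container's bottom and top.
    if large.min_xyz[2] - tol <= small.min_xyz[2] and small.max_xyz[2] <= large.max_xyz[2] + tol:
        return "inside"
    return "none"


cabinet = Box("cabinet", (0.0, 0.0, 0.0), (1.0, 0.5, 1.8))
vase = Box("vase", (0.3, 0.1, 1.8), (0.5, 0.3, 2.1))
book = Box("book", (0.2, 0.1, 0.9), (0.4, 0.35, 1.15))
mug = Box("mug", (2.0, 2.0, 0.0), (2.1, 2.1, 0.1))
print(support_relation(vase, cabinet))  # on
print(support_relation(book, cabinet))  # inside
print(support_relation(mug, cabinet))   # none
```

A layout generator that only ever emits the "on" case reproduces exactly the limitation described above; supporting "inside" additionally requires knowing which furniture is hollow and where its shelves are.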

Other approaches have attempted to address some of these issues, but the fundamental challenge of combining language understanding with spatial awareness remains.

Scenethesis: A multi-agent framework overview

Scenethesis is a training-free agentic framework that integrates LLM-based scene planning with vision-guided spatial refinement. It leverages vision foundation models, which encode compact spatial information and can generate coherent scene distributions that reflect real-world layouts.

As shown in Figure 3, the framework consists of four key stages: