
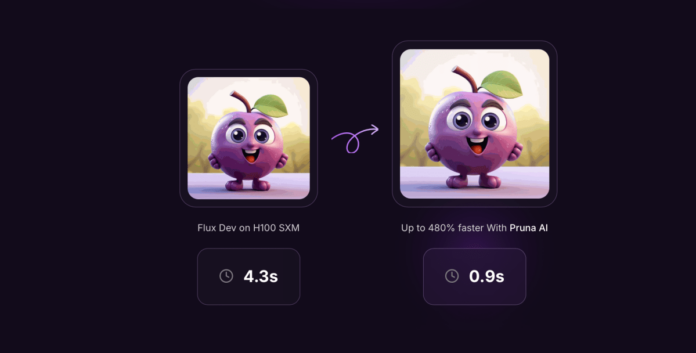

Pruna AI, a European startup that has been working on compression algorithms for AI models, is making its optimization framework open source on Thursday. Pruna AI has been building a framework that applies several efficiency methods, such as caching, pruning, quantization and distillation, to a given AI model.

John Rachwan, Pruna AI’s CTO and co-founder, told TechCrunch that the framework also standardizes how compressed models are saved and loaded, lets developers apply combinations of these compression methods, and evaluates the compressed model once it has been compressed. In particular, Pruna AI’s framework can evaluate whether compressing a model causes significant quality loss, and measure the performance gains the compression delivers.
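
The combine-then-evaluate workflow can be illustrated with a toy example. This is a minimal sketch under our own assumptions, not Pruna AI’s API: the “model” is just a list of floats, and every helper name (`prune`, `quantize`, `compress`, `to_json`, `from_json`, `evaluate`) is hypothetical. It chains magnitude pruning with 8-bit symmetric quantization, saves and reloads the result through a single JSON format, and then reports a quality-loss metric next to a rough size-reduction figure.

```python
import json

def prune(weights, threshold):
    # Magnitude pruning: zero out every weight whose absolute value is below `threshold`.
    return [0.0 if abs(w) < threshold else w for w in weights]

def quantize(weights, bits=8):
    # Symmetric uniform quantization: map floats to signed integers in [-qmax, qmax].
    qmax = 2 ** (bits - 1) - 1
    peak = max(abs(w) for w in weights)
    scale = peak / qmax if peak > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q, scale):
    # Approximate reconstruction of the original floats.
    return [v * scale for v in q]

def compress(weights, threshold=0.05, bits=8):
    # Combine two methods in sequence: prune first, then quantize what survives.
    q, scale = quantize(prune(weights, threshold), bits)
    return {"q": q, "scale": scale, "bits": bits}

def to_json(compressed):
    # Hypothetical "standard" save format shared by every compressed model in this toy.
    return json.dumps(compressed)

def from_json(text):
    # Matching loader for the format above.
    return json.loads(text)

def evaluate(original, compressed):
    # Quality loss: squared error of the reconstruction, relative to the original's energy.
    restored = dequantize(compressed["q"], compressed["scale"])
    err = sum((a - b) ** 2 for a, b in zip(original, restored))
    rel_error = err / sum(a ** 2 for a in original)
    # Size gain (rough proxy): 32-bit floats for all weights vs. `bits` per non-zero value,
    # ignoring the index overhead a real sparse format would add.
    nonzero = sum(1 for v in compressed["q"] if v != 0)
    size_ratio = (32 * len(original)) / (compressed["bits"] * max(nonzero, 1))
    return rel_error, size_ratio

weights = [0.9, -0.02, 0.5, 0.01, -0.7, 0.3]
c = from_json(to_json(compress(weights)))  # save and reload through the shared format
rel_error, size_ratio = evaluate(weights, c)
print(rel_error, size_ratio)  # size_ratio is 6.0 for these weights
```

Here the two smallest weights are pruned and the remaining four are stored as 8-bit integers, so the toy trades a small reconstruction error for a roughly 6x smaller footprint.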

“If I were to use an analogy, we are similar to how Hugging Face standardized transformers and diffusers: how to call them, how to load them, etc. We are doing the exact same thing, but for efficiency methods,” he continued.

The big AI labs are already using different compression methods. OpenAI, for example, has relied on distillation to produce faster versions of its flagship model.

This is likely how OpenAI developed GPT-4 Turbo, a faster version of GPT-4. Similarly, the Flux.1-schnell image generation model is a distilled version of the Flux.1 model from Black Forest Labs.

Distillation is a technique used to extract knowledge from a large AI model with a “teacher-student” setup. Developers send requests to a teacher model and record its outputs. The answers are sometimes compared against a dataset to check their accuracy. These outputs are then used to train a student model, which learns to approximate the teacher’s behavior.
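
To make the teacher/student loop concrete, here is a minimal sketch. The “teacher” is a hypothetical function we can only query, the reference label is invented for the example, and the “student” is a two-parameter line fitted with gradient descent. In practice both would be neural networks, with the student much smaller than the teacher; the sketch only shows the data flow: query the teacher, optionally filter its answers against a dataset, then train the student on what was recorded.

```python
def teacher(x):
    # Hypothetical stand-in for a large "teacher" model that we can only query.
    return 3.0 * x + 2.0

def collect_teacher_outputs(inputs):
    # Step 1: send requests to the teacher and record its responses.
    return [(x, teacher(x)) for x in inputs]

def filter_with_reference(pairs, reference, tolerance=0.5):
    # Step 2 (optional): drop responses that disagree with a labeled reference dataset.
    # Inputs without a reference label are kept as-is.
    return [(x, y) for x, y in pairs
            if x not in reference or abs(reference[x] - y) <= tolerance]

def train_student(pairs, epochs=500, lr=0.1):
    # Step 3: fit a tiny student model y = w * x + b to the recorded teacher outputs
    # by minimizing mean squared error with plain gradient descent.
    w, b = 0.0, 0.0
    n = len(pairs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

inputs = [i / 10 for i in range(-10, 11)]
pairs = collect_teacher_outputs(inputs)
# Hypothetical reference label that contradicts the teacher at x = 1.0, so that pair is dropped.
kept = filter_with_reference(pairs, reference={1.0: 10.0})
w, b = train_student(kept)
print(len(kept), round(w, 3), round(b, 3))  # 20 3.0 2.0
```

The student never sees the teacher’s internals, only its recorded answers, which is what lets distillation work against models that are only reachable through an API.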

“For big companies, what they usually do is build these things in-house. And what you can find in the open-source world is usually based on single methods: for example, one quantization method for LLMs or one caching method for diffusion models,” Rachwan explained. “But there is no tool that aggregates them all, makes them easy to use, and combines them together.” According to Rachwan, this is the value that Pruna brings right now.

Pruna AI supports any kind of model, including large language models, diffusion models, computer vision models and speech-to-text models, but the company is currently focusing on image and video models.

Pruna AI has a number of existing users, including Scenario and PhotoRoom. Pruna AI also offers an enterprise edition with advanced optimization features, including an optimization agent.

“The most exciting feature we will release soon is a compression agent,” Rachwan said. “Basically, you give the agent your model and you tell it: ‘I don’t want my accuracy to drop by more than 2%, but I do want more speed.’ Then the agent does its magic. It will find the best combination and return it to you. As a developer, you don’t need to do anything.”
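
Rachwan doesn’t say how the agent searches for that combination. The sketch below is a hypothetical, brute-force stand-in that only captures the constraint he describes: among candidate combinations of quantization precision and pruning sparsity, pick the fastest one whose accuracy drop stays within 2%. It assumes the 2% is a relative drop from a baseline, and `toy_evaluate` is a made-up cost model used purely for illustration, not measured data.

```python
from itertools import product

def choose_config(candidates, evaluate, baseline_accuracy, max_drop=0.02):
    # Brute-force constrained search: keep only configs whose relative accuracy drop
    # is at most `max_drop`, then return the one with the largest speedup (None if none qualify).
    best, best_speedup = None, float("-inf")
    for config in candidates:
        accuracy, speedup = evaluate(config)
        drop = (baseline_accuracy - accuracy) / baseline_accuracy
        if drop <= max_drop and speedup > best_speedup:
            best, best_speedup = config, speedup
    return best

def toy_evaluate(config):
    # Made-up cost model for illustration only; a real agent would benchmark the compressed model.
    bits, sparsity = config
    speedup = (16 / bits) / (1 - sparsity)
    accuracy = 0.80 - 0.001 * (16 - bits) - 0.04 * sparsity
    return accuracy, speedup

# Candidate combinations: weight precision (bits) x fraction of weights pruned.
candidates = list(product([16, 8, 4], [0.0, 0.25, 0.5]))
best = choose_config(candidates, toy_evaluate, baseline_accuracy=0.80)
print(best)  # (4, 0.0)
```

Exhaustive search is only practical for a handful of candidates; with real models each evaluation means compressing and benchmarking, so an actual agent would need a smarter search strategy.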

Pruna AI bills by the hour for its professional version. Rachwan explained that it’s “similar to renting a GPU through AWS or other cloud services.”

If your model is a critical part of your AI infrastructure, using the optimized model will save you a lot on inference. For example, Pruna AI has used its compression framework to make a Llama model eight times smaller without much loss. Pruna AI wants its customers to see its compression framework as a worthwhile investment.

Pruna AI recently raised $6.5 million in a seed funding round. Investors include EQT Ventures, Daphni, Motier Ventures and Kima Ventures.

Romain is a Senior Journalist at TechCrunch. He has written more than 3,000 articles about technology and tech startups and has become a prominent voice in the European tech scene. He has a background in startups, privacy and security, blockchain, mobile, media, social and fintech. He has twelve years of experience at TechCrunch and is one of the familiar faces covering Silicon Valley and the technology industry. His career began at TechCrunch when he was just 21 years old. Many people in the Paris tech ecosystem regard him as the best tech journalist in the city. Romain is known for spotting important startups ahead of others: he was the first to cover N26, Revolut and DigitalOcean. He has also covered large acquisitions by Apple, Microsoft and Snap. When he’s not writing, Romain is a developer, so he knows how the technology behind the tech works. He has a thorough understanding of the history of the computer industry over the last 50 years and is able to connect the origins of innovations to their impact on society. Romain graduated from Emlyon Business School, a leading French school that specializes in entrepreneurship. He has worked with several non-profits, including StartHer, which promotes the empowerment of women through technology, and Techfugees.
