
TechSpot is the place to go for tech advice and analysis.

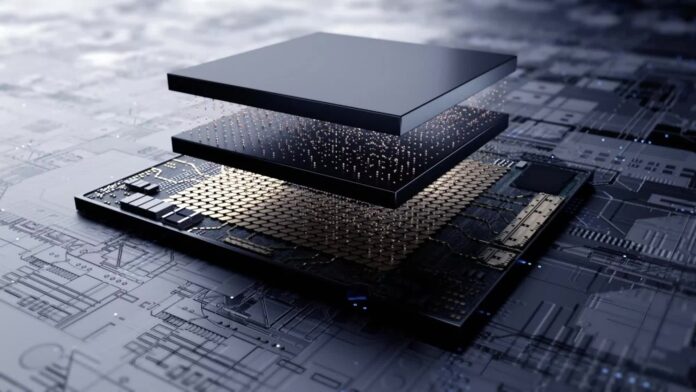

Recap: The AI accelerator race is driving rapid innovation in high-bandwidth memory. Memory giants Samsung, SK Hynix, and Micron showcased their next-generation HBM4 and HBM4e solutions at this year's GTC.

While data center GPUs are currently moving to HBM3e, the memory roadmaps revealed at Nvidia's GTC clearly show that HBM4 is the next step. ComputerBase attended the event and noted that the new standard enables serious improvements in density and bandwidth over HBM3.

SK Hynix demonstrated its first 48GB HBM4 stack, made up of 16 layers of 3GB each and running at 8Gbps. Samsung and Micron also had 16-high HBM4 stacks on display, and Samsung claimed that speeds in this generation would eventually reach 9.2Gbps. HBM4 products launching in 2026 will likely use 12-high, 36GB stacks. Micron claims its HBM4 will deliver more than 50% higher performance than HBM3e.
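Per-pin speeds are easier to compare once they are turned into per-stack figures. The minimal sketch below multiplies the layer count by the per-layer capacity, and the per-pin data rate by the interface width. The 2048-bit interface width is an assumption taken from the JEDEC HBM4 specification rather than from the article, and the function names are purely illustrative.

```python
# Sketch: estimate HBM4 stack capacity and peak bandwidth.
# ASSUMPTION: a 2048-bit interface per stack (JEDEC HBM4); not stated in the article.
HBM4_BUS_WIDTH_BITS = 2048


def stack_capacity_gb(layers: int, layer_gb: int) -> int:
    """Capacity of one stack: number of DRAM layers times capacity per layer."""
    return layers * layer_gb


def stack_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int = HBM4_BUS_WIDTH_BITS) -> float:
    """Peak bandwidth in GB/s: per-pin rate times pin count, divided by 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    # SK Hynix demo: 16 layers of 3GB at 8Gbps
    print(stack_capacity_gb(16, 3), stack_bandwidth_gbs(8.0))  # 48 2048.0
    # Likely 2026 parts: 12-high stacks of 3GB layers
    print(stack_capacity_gb(12, 3))  # 36
    # Samsung's eventual 9.2Gbps target for this generation
    print(stack_bandwidth_gbs(9.2))
```

Under that assumption, the 8Gbps SK Hynix demo works out to roughly 2TB/s per stack, and Samsung's 9.2Gbps target to about 2.36TB/s.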

However, memory makers are already looking beyond HBM4 to HBM4e and even more staggering capacities. Samsung's roadmap calls for 32Gb-per-layer DRAM, enabling 48GB and even 64GB per stack at data rates of 9.2 to 10Gbps. SK Hynix hinted at stacks of 20 or more layers, allowing capacities of up to 64GB using its 3GB chips on HBM4e.
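Samsung quotes HBM4e layer density in gigabits, while stack capacities are given in gigabytes. The sketch below shows the conversion. It assumes that the 48GB and 64GB figures correspond to 12-high and 16-high stacks; the article does not state those layer counts, and the function names are hypothetical.

```python
# Sketch: relate per-layer DRAM density (gigabits) to stack capacity (gigabytes).
# ASSUMPTION: the 48GB and 64GB figures correspond to 12-high and 16-high stacks.
BITS_PER_BYTE = 8


def layer_capacity_gb(layer_gbit: int) -> float:
    """Convert one DRAM layer's density from gigabits to gigabytes."""
    return layer_gbit / BITS_PER_BYTE


def stack_capacity_from_gbit(layers: int, layer_gbit: int) -> float:
    """Stack capacity in GB for a given layer count and per-layer density in Gb."""
    return layers * layer_capacity_gb(layer_gbit)


if __name__ == "__main__":
    print(layer_capacity_gb(32))  # 4.0 (a 32Gb layer holds 4GB)
    for layers in (12, 16):
        print(layers, stack_capacity_from_gbit(layers, 32))  # 12 48.0, then 16 64.0
```

The same conversion shows that the 3GB layers used in the HBM4 demos are 24Gb dies.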

This density is critical for Nvidia's upcoming Rubin GPUs aimed at AI training. Rubin Ultra, due in 2027, will use 16 stacks of HBM4e memory for a massive 1TB per GPU. Nvidia says Rubin Ultra packs four GPU chiplets into each package and that the NVL576 system built on it will offer a total of 365TB of memory and 4.6PB/s of bandwidth.
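The Rubin Ultra figures can be sanity-checked with simple arithmetic. This back-of-the-envelope sketch assumes 64GB per HBM4e stack (the top of the roadmaps described above) and that the "576" in NVL576 counts GPU chiplets, giving 576 / 4 = 144 packages. Both are our assumptions, not statements from Nvidia.

```python
# Sketch: back-of-the-envelope Rubin Ultra HBM capacity.
# ASSUMPTIONS: 64GB per HBM4e stack; "576" in NVL576 counts GPU chiplets.
STACKS_PER_PACKAGE = 16
GB_PER_STACK = 64
CHIPLETS_PER_PACKAGE = 4
NVL576_CHIPLETS = 576

package_hbm_gb = STACKS_PER_PACKAGE * GB_PER_STACK  # 1024GB, i.e. the quoted 1TB
packages = NVL576_CHIPLETS // CHIPLETS_PER_PACKAGE  # 144 packages
system_hbm_tb = packages * package_hbm_gb / 1024  # HBM only, in TB

print(package_hbm_gb, packages, system_hbm_tb)  # 1024 144 144.0
```

Under these assumptions, HBM alone comes to about 144TB, well short of the 365TB total. That suggests the quoted figure also counts memory beyond the GPUs' HBM.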

These numbers are impressive, but they come with an ultra-premium price tag. VideoCardz reports that consumer graphics cards with HBM are unlikely to appear anytime soon.

HBM4 and HBM4e represent a crucial bridge for continued AI performance scaling. If memory makers can deliver on their aggressive density and bandwidth roadmaps over the next couple of years, data-hungry AI workloads will get a major boost. Nvidia is counting on it.

Image Credit: ComputerBase.