In this tutorial, we’ll harness Riza’s secure Python execution as the cornerstone of a tool-augmented AI agent in Google Colab. Starting with API key management (via Colab secrets, environment variables, or a hidden input prompt), we’ll configure your Riza credentials to enable sandboxed, audit-ready code execution. We’ll integrate Riza’s ExecPython tool into a LangChain agent alongside Google’s Gemini generative model, define an AdvancedCallbackHandler that logs timestamped tool invocations, and build custom utilities for complex math and in-depth text analysis.

```python
%pip install --upgrade --quiet langchain-community langchain-google-genai rizaio python-dotenv

import os
import json
import getpass
from datetime import datetime
from typing import Dict, Any, List

from google.colab import userdata
```

We install and upgrade the core libraries (LangChain Community extensions, the Google Gemini integration, Riza’s secure execution package, and dotenv support) quietly in Colab. We then import standard utilities (e.g., os, datetime, json), typing annotations, secure input via getpass, and Colab’s userdata API to manage environment variables and user secrets.

```python
def setup_api_keys():
    """Set up API keys using multiple secure methods."""
    # 1. Try Colab secrets
    try:
        os.environ['GOOGLE_API_KEY'] = userdata.get('GOOGLE_API_KEY')
        os.environ['RIZA_API_KEY'] = userdata.get('RIZA_API_KEY')
        print("API keys loaded from Colab secrets")
        return True
    except Exception:
        pass

    # 2. Fall back to keys already present in the environment
    if os.getenv('GOOGLE_API_KEY') and os.getenv('RIZA_API_KEY'):
        print("API keys found in environment")
        return True

    # 3. Prompt for any missing key with hidden input
    try:
        if not os.getenv('GOOGLE_API_KEY'):
            google_key = getpass.getpass("Enter your Google Gemini API key: ")
            os.environ['GOOGLE_API_KEY'] = google_key
        if not os.getenv('RIZA_API_KEY'):
            riza_key = getpass.getpass("Enter your Riza API key: ")
            os.environ['RIZA_API_KEY'] = riza_key
        # Treat an empty entry as a failure rather than a success
        if not (os.getenv('GOOGLE_API_KEY') and os.getenv('RIZA_API_KEY')):
            raise ValueError("empty API key")
        print("API keys set securely via input")
        return True
    except Exception:
        print("Failed to set API keys")
        return False


if not setup_api_keys():
    print("Please set up your API keys using one of these methods:")
    print("  1. Colab Secrets: open the Secrets (key icon) tab in the left panel, add GOOGLE_API_KEY and RIZA_API_KEY")
    print("  2. Environment: Set GOOGLE_API_KEY and RIZA_API_KEY before running")
    print("  3. Manual input: Run the cell and enter keys when prompted")
    # SystemExit stops the cell without shutting down the Colab kernel
    raise SystemExit("API keys are required to continue.")
```

The above cell defines a setup_api_keys() function that retrieves your Google Gemini and Riza API keys by first attempting to load them from Colab secrets, then falling back to existing environment variables, and finally prompting you to enter any missing key via hidden input. If none of these methods succeed, it prints instructions on how to provide your keys and stops execution.
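The fallback order can also be captured in a small, testable function. The sketch below uses a hypothetical `resolve_key` helper (not part of Colab or Riza); a plain dict stands in for Colab’s `userdata`, and any callable such as `getpass.getpass` can serve as the prompt. It treats an empty value from any source as missing, so pressing Enter at the prompt does not count as a key.

```python
from typing import Callable, Mapping, Optional


def resolve_key(
    name: str,
    secrets: Mapping[str, str],
    environ: Mapping[str, str],
    prompt: Callable[[str], str],
) -> Optional[str]:
    """Return the first non-empty value for `name`, checking sources in order."""
    # 1. Secret store (a dict standing in for Colab's userdata)
    value = secrets.get(name)
    if value:
        return value
    # 2. Environment variables (e.g. os.environ)
    value = environ.get(name)
    if value:
        return value
    # 3. Hidden interactive input (e.g. getpass.getpass); blank means "no key"
    value = prompt(f"Enter {name}: ").strip()
    return value or None
```

In the notebook you might call `resolve_key("RIZA_API_KEY", {}, os.environ, getpass.getpass)` and write the result to `os.environ` only when it is not `None`.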

```python
from langchain_community.tools.riza.command import ExecPython
from langchain_google_genai import ChatGoogleGenerativeAI
from langchain.agents import AgentExecutor, create_tool_calling_agent
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.messages import HumanMessage, AIMessage
from langchain.memory import ConversationBufferWindowMemory
from langchain.tools import Tool
from langchain.callbacks.base import BaseCallbackHandler
```

We import Riza’s ExecPython tool alongside LangChain’s core components for building a tool-calling agent: the Gemini LLM wrapper (ChatGoogleGenerativeAI), the agent executor and constructor (AgentExecutor, create_tool_calling_agent), the prompt template and message classes, a conversation memory buffer, the generic Tool wrapper, and the base callback handler for logging and monitoring agent actions. These building blocks let you assemble, configure, and track a memory-enabled, multi-tool AI agent in Colab.

```python
class AdvancedCallbackHandler(BaseCallbackHandler):
    """Enhanced callback handler for detailed logging and metrics."""

    def __init__(self):
        super().__init__()
        self.execution_log = []
        self.start_time = None
        self.token_count = 0

    def on_agent_action(self, action, **kwargs):
        timestamp = datetime.now().strftime("%H:%M:%S")
        tool_input = str(action.tool_input)
        self.execution_log.append({
            "timestamp": timestamp,
            "action": action.tool,
            # Truncate long inputs to keep the log readable
            "input": tool_input[:100] + "..." if len(tool_input) > 100 else tool_input,
        })
        print(f"[{timestamp}] Using tool: {action.tool}")

    def on_agent_finish(self, finish, **kwargs):
        timestamp = datetime.now().strftime("%H:%M:%S")
        print(f"[{timestamp}] Agent completed successfully")

    def get_execution_summary(self):
        return {
            "total_actions": len(self.execution_log),
            "execution_log": self.execution_log,
        }


class MathTool:
    """Advanced mathematical operations tool."""

    @staticmethod
    def complex_calculation(expression: str) -> str:
        """Evaluate mathematical expressions in a restricted namespace."""
        try:
            import math
            import numpy as np

            # Removing __builtins__ limits what eval() can reach, but it is
            # NOT a security sandbox: attribute tricks can still escape it.
            safe_dict = {
                "__builtins__": {},
                "abs": abs, "round": round, "min": min, "max": max,
                "sum": sum, "len": len, "pow": pow,
                "math": math, "np": np,
                "sin": math.sin, "cos": math.cos, "tan": math.tan,
                "log": math.log, "sqrt": math.sqrt, "pi": math.pi, "e": math.e,
            }
            result = eval(expression, safe_dict)
            return f"Result: {result}"
        except Exception as e:
            return f"Math Error: {str(e)}"


class TextAnalyzer:
    """Advanced text analysis tool."""

    @staticmethod
    def analyze_text(text: str) -> str:
        """Perform comprehensive text analysis."""
        try:
            # Frequency of each alphabetic character (case-insensitive)
            char_freq = {}
            for char in text.lower():
                if char.isalpha():
                    char_freq[char] = char_freq.get(char, 0) + 1

            words = text.split()
            word_count = len(words)
            avg_word_length = sum(len(word) for word in words) / max(word_count, 1)

            specific_chars = {}
            for char in set(text.lower()):
                if char.isalpha():
                    specific_chars[char] = text.lower().count(char)

            analysis = {
                "total_characters": len(text),
                "total_words": word_count,
                "average_word_length": round(avg_word_length, 2),
                # Ten most frequent characters
                "character_frequencies": dict(sorted(char_freq.items(), key=lambda x: x[1], reverse=True)[:10]),
                "specific_character_counts": specific_chars,
            }
            return json.dumps(analysis, indent=2)
        except Exception as e:
            return f"Analysis Error: {str(e)}"
```

The cell above brings together three pieces: an AdvancedCallbackHandler that records every tool invocation in a timestamped log and can summarize the total actions taken; a MathTool class that evaluates mathematical expressions with eval() in a restricted namespace (no builtins, only whitelisted math helpers), which limits accidental misuse but is not a true sandbox; and a TextAnalyzer class that computes text statistics, such as character frequencies, word counts, and average word length, and returns the results as formatted JSON. Note that these two custom tools run locally in the Colab runtime, not inside Riza’s sandbox.
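Because eval() with an empty `__builtins__` can still be escaped through attribute access (for example, starting from `().__class__`), a stricter option is to parse the expression and evaluate only whitelisted syntax. The sketch below is a swapped-in technique, not the tutorial’s MathTool: `safe_eval` is a hypothetical helper that walks Python’s `ast` and allows only numbers, arithmetic operators, and a few named math functions and constants. It does not support NumPy and does not cap the size of results such as very large exponents.

```python
import ast
import math
import operator

# Whitelisted operators
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.FloorDiv: operator.floordiv,
    ast.Mod: operator.mod,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

# Whitelisted names: math functions and constants only
_NAMES = {
    "sin": math.sin, "cos": math.cos, "tan": math.tan,
    "log": math.log, "sqrt": math.sqrt, "abs": abs, "round": round,
    "pi": math.pi, "e": math.e,
}


def safe_eval(expression: str):
    """Evaluate an arithmetic expression using only whitelisted AST nodes."""

    def _eval(node: ast.AST):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        if isinstance(node, ast.Name) and node.id in _NAMES:
            return _NAMES[node.id]
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in _NAMES and not node.keywords):
            return _NAMES[node.func.id](*[_eval(arg) for arg in node.args])
        # Anything else (attributes, subscripts, imports, lambdas...) is rejected
        raise ValueError(f"Disallowed expression: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval"))
```

Wrapping it for the agent would follow the same pattern as MathTool, for example returning `f"Result: {safe_eval(expression)}"` and catching `ValueError` for rejected input.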

```python
def validate_api_keys():
    """Validate API keys before creating agents."""
    try:
        # A minimal request confirms the Gemini key actually works
        test_llm = ChatGoogleGenerativeAI(
            model="gemini-1.5-flash",
            temperature=0
        )
        test_llm.invoke("test")
        print("Gemini API key validated")

        # Constructing the tool does not run any code, so an invalid
        # Riza key may only surface on the first execution
        test_tool = ExecPython()
        print("Riza tool initialized")
        return True
    except Exception as e:
        print(f"API key validation failed: {str(e)}")
        print("Please check your API keys and try again")
        return False


if not validate_api_keys():
    raise SystemExit("API key validation failed.")

# Riza sandboxed Python execution
python_tool = ExecPython()

# Wrap the local helper classes as LangChain tools
math_tool = Tool(
    name="advanced_math",
    description="Perform complex mathematical calculations and evaluations",
    func=MathTool.complex_calculation
)

text_analyzer_tool = Tool(
    name="text_analyzer",
    description="Analyze text for character frequencies, word statistics, and specific character counts",
    func=TextAnalyzer.analyze_text
)

tools = [python_tool, math_tool, text_analyzer_tool]

try:
    # Primary configuration with custom sampling parameters
    llm = ChatGoogleGenerativeAI(
        model="gemini-1.5-flash",
        temperature=0.1,
        max_tokens=2048,
        top_p=0.8,
        top_k=40
    )
    print("Gemini model initialized successfully")
except Exception as e:
    # Simpler fallback configuration without top_p/top_k
    print(f"Primary Gemini configuration failed, falling back to a basic Flash configuration: {e}")
    llm = ChatGoogleGenerativeAI(
        model="gemini-1.5-flash",
        temperature=0.1,
        max_tokens=2048
    )
```

In this cell, we first define and run validate_api_keys() to check the credentials: it makes a short test call to Gemini and instantiates the Riza ExecPython tool (which runs no code, so an invalid Riza key may only surface on the first execution). We stop if validation fails. We then instantiate python_tool for sandboxed code execution, wrap our MathTool and TextAnalyzer methods into LangChain Tool objects, and collect them into the tools list. Finally, we initialize the Gemini model with custom settings (temperature, max_tokens, top_p, top_k); if that configuration raises an error, we fall back to a simpler Flash configuration without top_p and top_k. Both configurations use gemini-1.5-flash, and because constructing the client typically sends no request, the fallback only triggers on errors raised at construction time.
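Since constructing a model client usually does not contact the API, a fallback that reacts to real failures has to exercise each candidate. The hypothetical `first_working` helper below (not a LangChain API) is a minimal sketch of that idea: it tries a sequence of factories and returns the first object whose factory and probe call both succeed.

```python
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def first_working(
    factories: Iterable[Callable[[], T]],
    probe: Callable[[T], object],
) -> T:
    """Return the first object whose factory and probe call both succeed."""
    errors: List[Exception] = []
    for factory in factories:
        try:
            candidate = factory()  # e.g. construct a client with one config
            probe(candidate)       # e.g. send a cheap test request
            return candidate
        except Exception as exc:   # remember why this candidate failed
            errors.append(exc)
    raise RuntimeError(f"All {len(errors)} configurations failed: {errors!r}")
```

In the notebook, the factories could be two lambdas that build ChatGoogleGenerativeAI with and without top_p/top_k, with `probe=lambda m: m.invoke("ping")`; keep in mind that each probe costs one API call.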

```python
prompt_template = ChatPromptTemplate.from_messages([
    ("system", """You are an advanced AI assistant with access to powerful tools.

Key capabilities:
- Python code execution for complex computations
- Advanced mathematical operations
- Text analysis and character counting
- Problem decomposition and step-by-step reasoning

Instructions:
1. Always break down complex problems into smaller steps
2. Use the most appropriate tool for each task
3. Verify your results when possible
4. Provide clear explanations of your reasoning
5. For text analysis questions (like counting characters), use the text_analyzer tool first, then verify with Python if needed

Be precise, thorough, and helpful."""),
    # Inject the windowed conversation history stored by the memory
    ("placeholder", "{chat_history}"),
    ("human", "{input}"),
    ("placeholder", "{agent_scratchpad}"),
])

# Keep only the last 5 exchanges
memory = ConversationBufferWindowMemory(
    k=5,
    return_messages=True,
    memory_key="chat_history"
)

callback_handler = AdvancedCallbackHandler()

agent = create_tool_calling_agent(llm, tools, prompt_template)

agent_executor = AgentExecutor(
    agent=agent,
    tools=tools,
    verbose=True,
    memory=memory,
    callbacks=[callback_handler],
    max_iterations=10,
    # Runnable-based agents like this one support only "force":
    # return a fixed message when the iteration limit is hit
    early_stopping_method="force"
)
```

This cell constructs the agent’s “brain” and workflow: it defines a structured ChatPromptTemplate that describes the toolset and reasoning style, sets up a sliding-window conversation memory that retains the last five exchanges (injected through the chat_history placeholder), and instantiates the AdvancedCallbackHandler for real-time logging. It then creates a tool-calling agent by binding the Gemini LLM, custom tools, and prompt template, and wraps it in an AgentExecutor that runs the agent loop for up to ten iterations, prints a verbose trace, and, if that limit is reached, returns a fixed “stopped” message instead of a final answer.
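To make the executor’s role concrete, here is a toy version of the loop it manages, written without LangChain. It is a simplified illustration, not LangChain’s implementation: `ToolCall`, `Finish`, and `run_agent` are hypothetical names, a plain function stands in for the LLM planner, and the message returned at the iteration limit approximates LangChain’s `early_stopping_method="force"` behavior.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union


@dataclass
class ToolCall:
    tool: str
    tool_input: str


@dataclass
class Finish:
    output: str


Step = Union[ToolCall, Finish]
Planner = Callable[[str, List[Tuple[ToolCall, str]]], Step]


def run_agent(
    plan: Planner,
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_iterations: int = 10,
) -> str:
    """Toy tool-calling loop: ask the planner for a step, run tools, repeat."""
    scratchpad: List[Tuple[ToolCall, str]] = []  # (action, observation) history
    for _ in range(max_iterations):
        step = plan(question, scratchpad)
        if isinstance(step, Finish):
            return step.output  # planner produced a final answer
        observation = tools[step.tool](step.tool_input)
        scratchpad.append((step, observation))  # fed back on the next turn
    # Iteration limit reached: return a fixed message instead of an answer
    return "Agent stopped due to max iterations."
```

The scratchpad plays the role of `{agent_scratchpad}` in the prompt: each tool result is handed back to the planner so it can decide whether to call another tool or finish.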

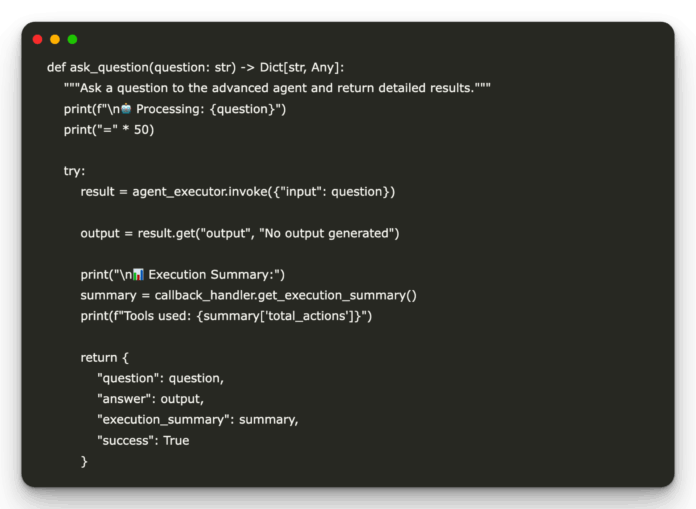

```python
def ask_question(question: str) -> Dict[str, Any]:
    """Ask a question to the advanced agent and return detailed results."""
    print(f"\nProcessing: {question}")
    print("=" * 50)
    # Start a fresh log so the summary counts only this question's tool calls
    callback_handler.execution_log = []
    try:
        result = agent_executor.invoke({"input": question})
        output = result.get("output", "No output generated")
        print("\nExecution Summary:")
        summary = callback_handler.get_execution_summary()
        print(f"Tools used: {summary['total_actions']}")
        return {
            "question": question,
            "answer": output,
            "execution_summary": summary,
            "success": True
        }
    except Exception as e:
        print(f"Error: {str(e)}")
        return {
            "question": question,
            "error": str(e),
            "success": False
        }


test_questions = [
    "How many r's are in strawberry?",
    "Calculate the compound interest on $1000 at 5% for 3 years",
    "Analyze the word frequency in the sentence: 'The quick brown fox jumps over the lazy dog'",
    "What's the fibonacci sequence up to the 10th number?"
]

print("Advanced Gemini Agent with Riza - Ready!")
print("API keys configured securely")
print("Testing with sample questions...\n")

results = []
for question in test_questions:
    result = ask_question(question)
    results.append(result)
    print("\n" + "=" * 80 + "\n")

print("FINAL SUMMARY:")
successful = sum(1 for r in results if r["success"])
print(f"Successfully processed: {successful}/{len(results)} questions")
```

Finally, we define a helper function, ask_question(), that sends a user query to the agent executor, prints the question header, captures the agent’s response (or error), and outputs a brief execution summary showing how many tool calls were made for that question. We then supply a list of sample questions (counting characters, computing compound interest, analyzing word frequency, and generating a Fibonacci sequence), invoke the agent on each, and collect the results. After running all tests, we print a concise “FINAL SUMMARY” indicating how many queries were processed successfully, confirming that the Gemini + Riza agent is up and running in Colab.
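To judge the agent’s answers, it helps to compute reference values for the sample questions directly. The helpers below are hypothetical and make assumptions the questions leave open: interest compounds annually, the Fibonacci sequence starts at 0, and words are compared case-insensitively with surrounding punctuation stripped. Different assumptions (monthly compounding, or a sequence starting at 1) give different answers, which the agent may legitimately choose.

```python
from collections import Counter
from typing import List


def count_letter(word: str, letter: str) -> int:
    """Case-insensitive count of one letter in a word."""
    return Counter(word.lower())[letter.lower()]


def compound_interest(principal: float, rate: float, years: int,
                      periods_per_year: int = 1) -> float:
    """Interest earned; assumes annual compounding by default."""
    amount = principal * (1 + rate / periods_per_year) ** (periods_per_year * years)
    return amount - principal


def fibonacci(n: int) -> List[int]:
    """First n Fibonacci numbers, assuming the sequence starts 0, 1."""
    sequence = []
    a, b = 0, 1
    for _ in range(n):
        sequence.append(a)
        a, b = b, a + b
    return sequence


def word_frequencies(sentence: str) -> Counter:
    """Case-insensitive word counts with surrounding punctuation stripped."""
    return Counter(word.strip(".,!?'\"").lower() for word in sentence.split())
```

Under these assumptions, "strawberry" contains three r's, $1000 at 5% compounded annually for 3 years earns $157.625 in interest, and "the" appears twice in the sample sentence.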

In conclusion, by centering the architecture on Riza’s secure execution environment, we’ve created an AI agent that generates responses via Gemini while running the Python code it writes in a sandboxed, monitored context. Riza’s ExecPython tool keeps that model-generated code out of the Colab runtime, while the lighter math and text utilities run locally. With LangChain orchestrating tool calls, a callback handler logging each action, and a memory buffer maintaining context, we now have a modular framework ready for tasks such as automated data processing, research prototyping, or educational demos.

