On Wednesday, web infrastructure provider Cloudflare announced a new feature called “AI Labyrinth” that aims to combat unauthorized AI data scraping by serving fake AI-generated content to bots. The tool is meant to thwart AI companies that crawl websites without permission to collect training data for the large language models that power AI assistants such as ChatGPT.

Cloudflare, founded in 2009, is best known for providing infrastructure and security services for websites, including protection against distributed denial-of-service (DDoS) attacks and other malicious traffic.

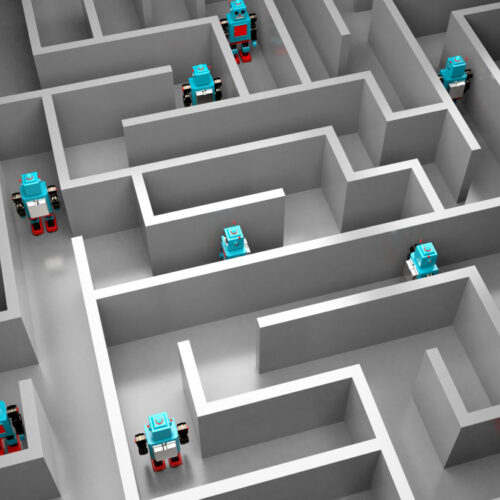

Instead of blocking bots, Cloudflare’s new system lures them into a “maze” of realistic-looking but irrelevant pages, wasting the crawler’s computing resources. This is a significant departure from the traditional block-and-defend approach used by most website security services. Cloudflare claims that blocking bots can backfire because it alerts crawler operators that they have been detected. The company writes:

“When we detect unauthorized crawling, rather than blocking the request, we will link to a series of AI-generated pages that are convincing enough to entice a crawler to traverse them. But while real looking, this content is not actually the content of the site we are protecting, so the crawler wastes time and resources.”
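To make the idea concrete, here is a minimal Python sketch of a "maze" of decoy pages. It is not Cloudflare's implementation: the `/maze/` URL prefix, the `FACTS` list, and the `decoy_page` and `handle_request` functions are all hypothetical, and the filler text is hard-coded rather than AI-generated. The sketch also simplifies by serving a decoy directly to a flagged crawler instead of linking to one. Each decoy page derives its content and its outgoing links from a hash of its own path, so every page leads to more pages without anything being stored.

```python
import hashlib

# Hypothetical pool of neutral, factual filler text (stands in for generated content).
FACTS = [
    "Water boils at 100 degrees Celsius at standard atmospheric pressure.",
    "The interior angles of a triangle in Euclidean geometry sum to 180 degrees.",
    "DNA contains four nucleotide bases: adenine, thymine, guanine, and cytosine.",
]


def decoy_page(path: str, fanout: int = 3) -> str:
    """Build a decoy page whose text and outgoing links are derived from its path."""
    if not 1 <= fanout <= 8:
        raise ValueError("fanout must be between 1 and 8")
    digest = hashlib.sha256(path.encode("utf-8")).hexdigest()  # 64 hex characters
    fact = FACTS[int(digest[:8], 16) % len(FACTS)]
    # Each 8-character slice of the digest becomes the path of another decoy page.
    links = "".join(
        f'<li><a href="/maze/{digest[i * 8:(i + 1) * 8]}">Related article {i + 1}</a></li>'
        for i in range(fanout)
    )
    return f"<html><body><p>{fact}</p><ul>{links}</ul></body></html>"


def handle_request(path: str, is_unauthorized_crawler: bool, real_page: str) -> tuple:
    """Return (status, body). Flagged crawlers get a decoy with 200, not a block."""
    if is_unauthorized_crawler or path.startswith("/maze/"):
        return 200, decoy_page(path)
    return 200, real_page
```

Because the crawler receives an ordinary 200 response either way, nothing in the status code tells its operator that it has been detected, which is the point Cloudflare makes about blocking.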

According to the company, the content served to bots has been deliberately chosen to be irrelevant to the website that is being crawled. However, it is carefully sourced and generated using real scientific information, such as neutral information on biology, physics or mathematics, to avoid spreading misinformation. Cloudflare uses its Workers AI platform, a commercial platform for AI tasks, to create this content.

Cloudflare designed the trap pages and links to be invisible and inaccessible to regular visitors, so people browsing the web won’t accidentally run into them.

An improved honeypot

AI Labyrinth is what Cloudflare calls a “next-generation honeypot.” Traditional honeypots are links hidden from human visitors that bots parsing a page’s HTML can still find and follow. Cloudflare claims that modern bots have become adept at spotting such simple traps, so more sophisticated deception is required. The decoy links carry meta directives that prevent search engine indexing while remaining attractive to data-scraping bots.
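The basic honeypot mechanism can be illustrated with a short Python sketch. The specifics here are assumptions for illustration, not details Cloudflare has published: the `/maze/entry` URL, the inline `display:none` styling, and the exact `noindex, nofollow` robots directive are all hypothetical choices. The sketch injects a link that a browser would not render, then shows that a naive HTML parser of the kind a scraper might use still finds it.

```python
from html.parser import HTMLParser

# Hypothetical hidden link: not rendered for people, but present in the markup.
HIDDEN_LINK = (
    '<a href="/maze/entry" style="display:none" aria-hidden="true" '
    'tabindex="-1">archive</a>'
)

# Assumed head for decoy pages: asks well-behaved search engines not to index them.
DECOY_HEAD = '<head><meta name="robots" content="noindex, nofollow"></head>'


def inject_hidden_link(html: str) -> str:
    """Insert the hidden link before the first </body>, or append it if absent."""
    if "</body>" in html:
        return html.replace("</body>", HIDDEN_LINK + "</body>", 1)
    return html + HIDDEN_LINK


class LinkCollector(HTMLParser):
    """Collects every href in the markup, ignoring whether the link is visible."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def links_seen_by_parser(html: str) -> list:
    """Return the links a simple HTML-parsing bot would extract from the page."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.hrefs
```

A scraper that ignores robots directives and follows every extracted link would request `/maze/entry`, while a human visitor never sees the link. A link hidden this plainly is also the kind of simple trap that, per Cloudflare, modern bots have learned to spot.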