OpenBMB Releases MiniCPM4: Ultra-Efficient Language Models for Edge Devices with Sparse...

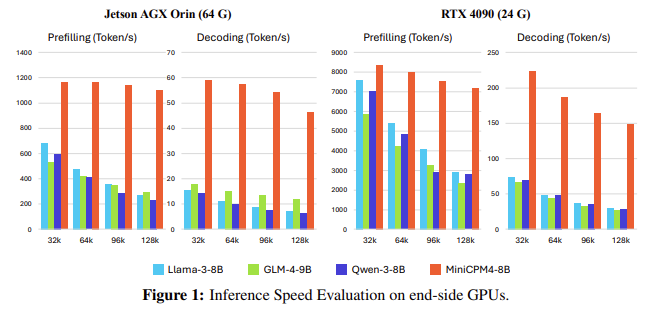

The Need for Efficient On-Device Language Models

Large language models have become integral to AI systems, enabling tasks like multilingual translation, virtual assistance, and automated reasoning through transformer-based architectures. While highly capable, these models are typically large, requiring powerful cloud infrastructure for training and inference. This reliance leads to latency,...