Is Automated Hallucination Detection in LLMs Feasible? A Theoretical and Empirical...

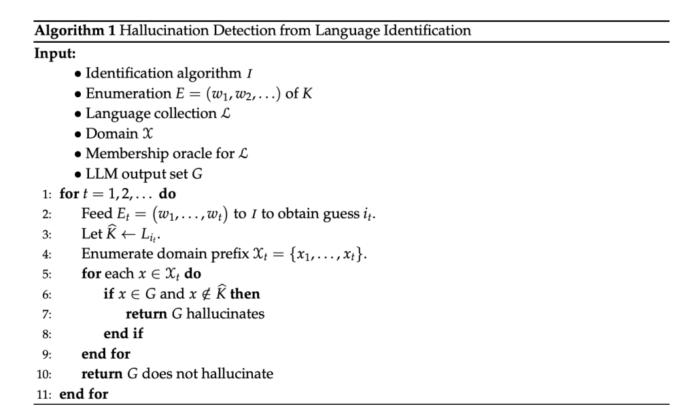

Recent advances in LLMs have significantly improved natural language understanding, reasoning, and generation. These models now excel at diverse tasks such as mathematical problem-solving and generating contextually appropriate text. However, a persistent challenge remains: LLMs often hallucinate, producing fluent but factually incorrect responses. These hallucinations undermine the reliability of LLMs, especially...