News

Featured

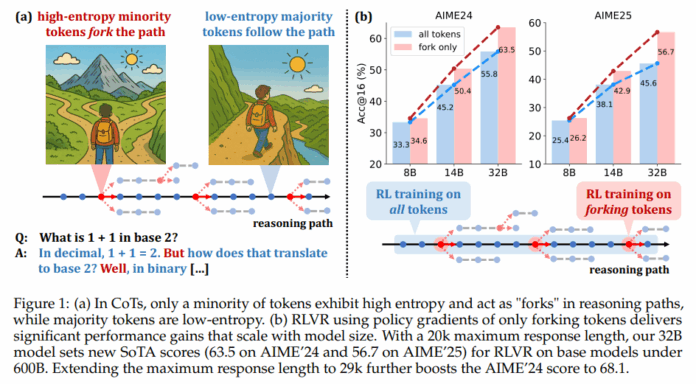

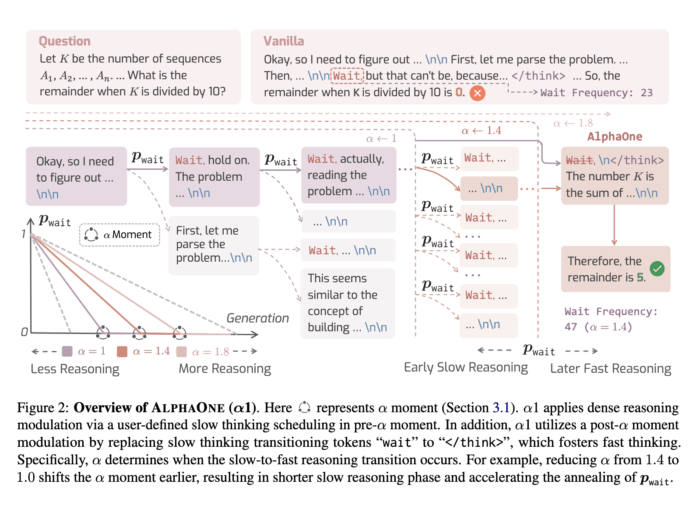

High-Entropy Token Selection in Reinforcement Learning with Verifiable Rewards (RLVR) Improves...

Large Language Models (LLMs) generate step-by-step responses known as chains of thought (CoTs), in which each token contributes to a coherent, logical narrative. Various reinforcement learning techniques have been employed to improve the quality of this reasoning. These methods let the model learn from feedback by aligning generated outputs with...