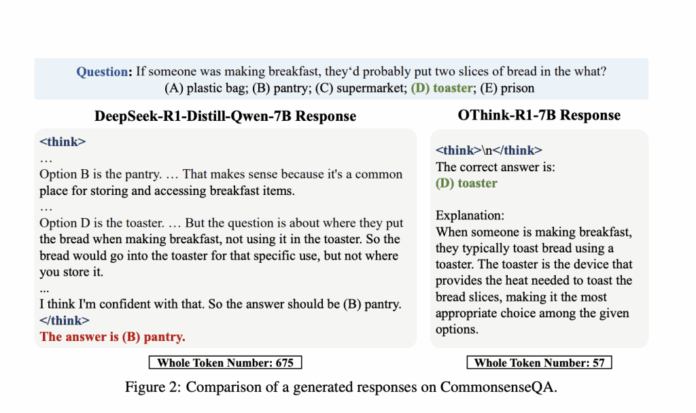

OThink-R1: A Dual-Mode Reasoning Framework to Cut Redundant Computation in LLMs

The Inefficiency of Static Chain-of-Thought Reasoning in LRMs

Recent large reasoning models (LRMs) achieve top performance by using detailed chain-of-thought (CoT) reasoning to solve complex tasks. However, many of the simple tasks they handle could be solved by smaller models using fewer tokens, which makes such elaborate reasoning unnecessary. This echoes human thinking, where we use fast,...