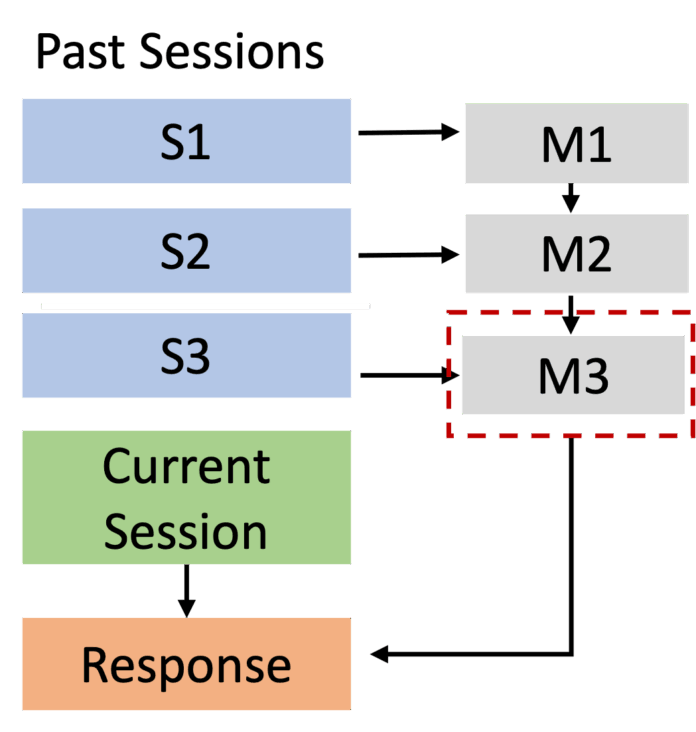

Recursively summarizing enables long-term dialog memory in LLMs

Imagine talking to someone who forgets what you told them ten minutes ago. Large language models (LLMs) face a similar challenge in extended conversations: despite their large context windows, they often fail to recall key information from earlier in a dialog, which leads to inconsistent or contradictory responses.

This limitation matters...