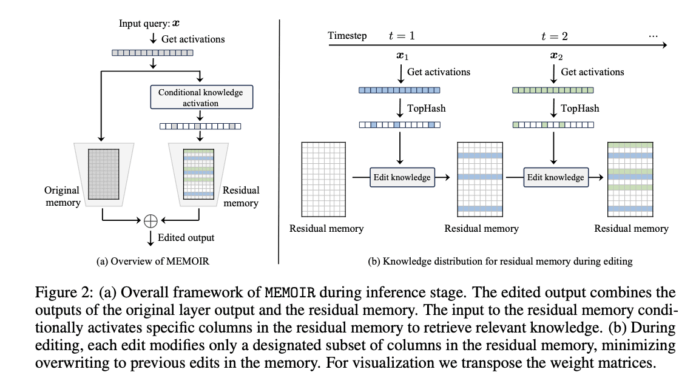

EPFL Researchers Introduce MEMOIR: A Scalable Framework for Lifelong Model Editing...

The Challenge of Updating LLM Knowledge

LLMs perform well across a wide range of tasks thanks to extensive pre-training on vast datasets. However, once deployed, these models frequently generate outdated or inaccurate information and can reflect biases, so their knowledge needs to be updated continuously. Traditional fine-tuning methods are expensive and susceptible...