News

Featured

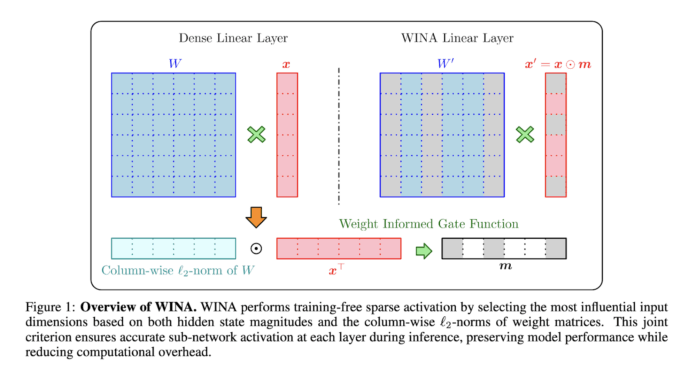

This AI Paper from Microsoft Introduces WINA: A Training-Free Sparse Activation...

Large language models (LLMs), with billions of parameters, power many AI-driven services across industries. However, their massive size and complex architectures make inference computationally expensive. As these models evolve, balancing computational efficiency against output quality has become a crucial area of...