News

Featured

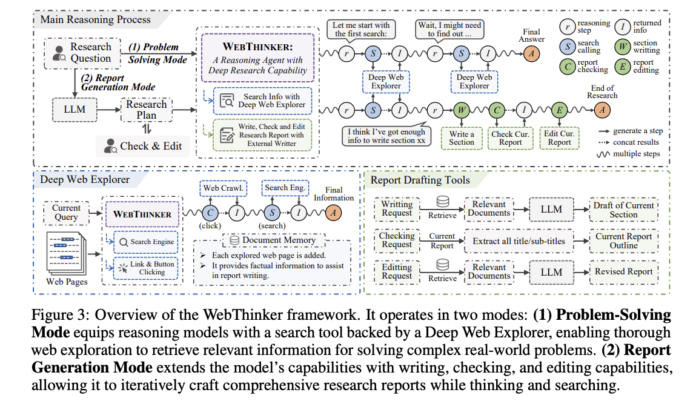

This AI Paper Introduces WebThinker: A Deep Research Agent that Empowers...

Large reasoning models (LRMs) have shown impressive capabilities in mathematics, coding, and scientific reasoning. However, when they rely solely on internal knowledge, they face significant limitations in addressing complex information research needs. These models struggle to conduct thorough web information retrieval and to generate accurate scientific reports through multi-step reasoning processes....