News

Featured

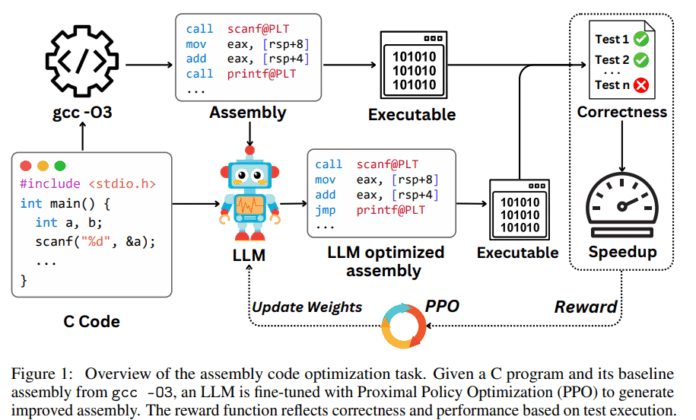

Optimizing Assembly Code with LLMs: Reinforcement Learning Outperforms Traditional Compilers

Large language models (LLMs) have shown impressive capabilities across a range of programming tasks, yet their potential for program optimization has not been fully explored. While some recent efforts have used LLMs to improve the performance of C++ and Python code, the broader application of LLMs to code optimization, especially in low-level programming contexts,...