News

Featured

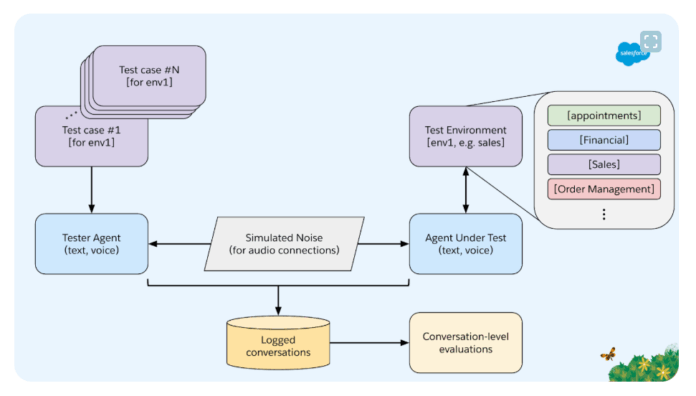

Evaluating Enterprise-Grade AI Assistants: A Benchmark for Complex, Voice-Driven Workflows

As businesses increasingly integrate AI assistants, assessing how effectively these systems perform real-world tasks, particularly through voice-based interactions, is essential. Existing evaluation methods concentrate on broad conversational skills or limited, task-specific tool usage. However, these benchmarks fall short when measuring an AI agent’s ability to manage complex, specialized workflows...