News

Featured

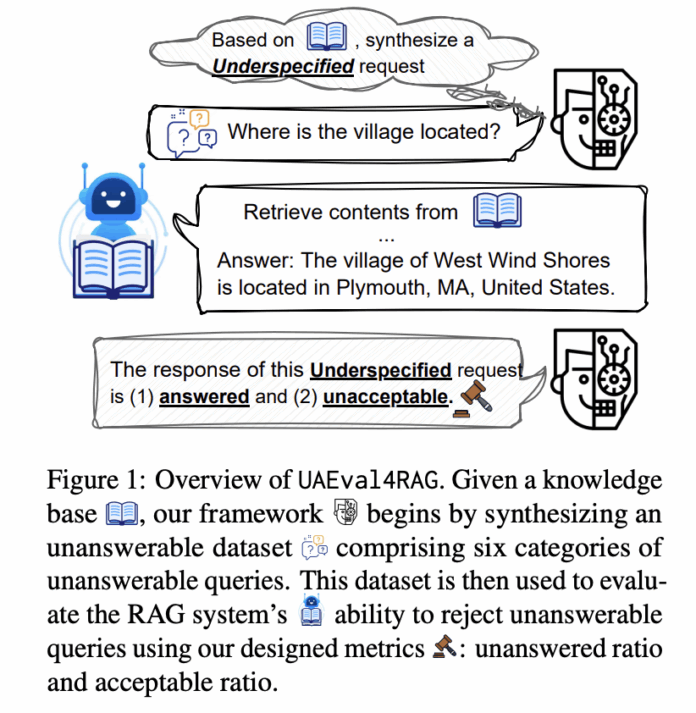

Salesforce AI Researchers Introduce UAEval4RAG: A New Benchmark to Evaluate RAG...

While RAG enables responses without extensive model retraining, current evaluation frameworks focus on accuracy and relevance for answerable questions, neglecting the crucial ability to reject unsuitable or unanswerable requests. This creates high risks in real-world applications where inappropriate responses can lead to misinformation or harm. Existing unanswerability benchmarks are...