OpenAI

Featured

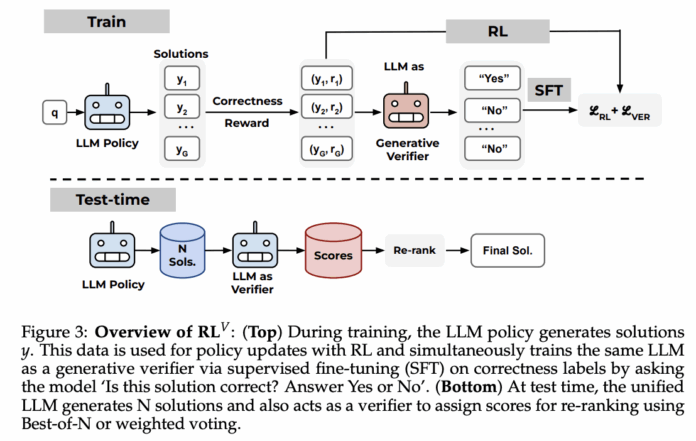

RL^V: Unifying Reasoning and Verification in Language Models through Value-Free Reinforcement...

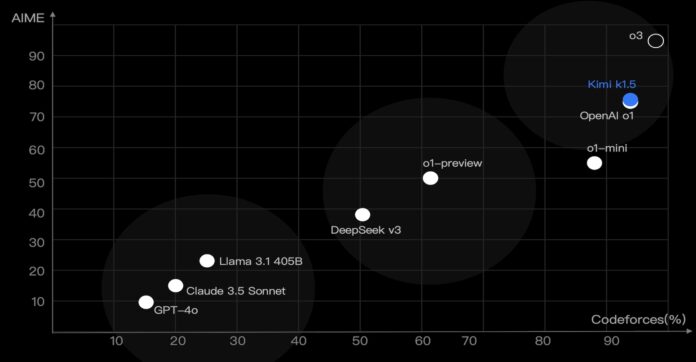

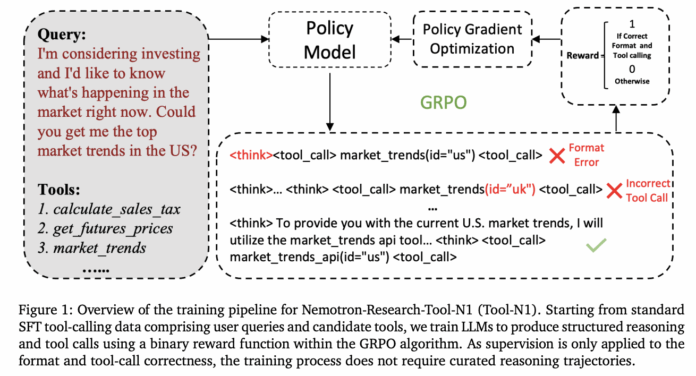

LLMs have gained outstanding reasoning capabilities through reinforcement learning (RL) on correctness rewards. Modern RL algorithms for LLMs, including GRPO, VinePPO, and Leave-one-out PPO, have moved away from traditional PPO approaches by eliminating the learned value function network in favor of empirically estimated returns. This reduces computational demands and...