Anthropic

Featured

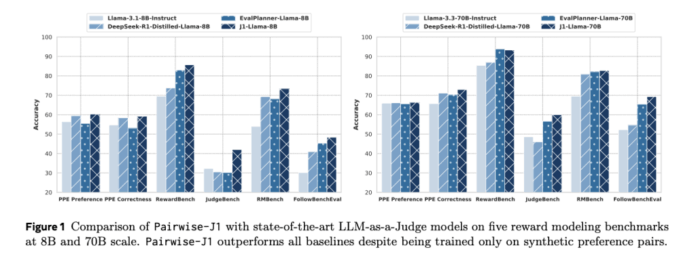

Meta Researchers Introduced J1: A Reinforcement Learning Framework That Trains Language...

Large language models are now used for evaluation and judgment tasks, extending beyond their traditional role of text generation. This has led to the “LLM-as-a-Judge” approach, in which a model assesses outputs from other language models. Such evaluations are essential in reinforcement learning pipelines, benchmark testing, and system alignment. These judge models...