Anthropic

Teaching AI to Say ‘I Don’t Know’: A New Dataset Mitigates...

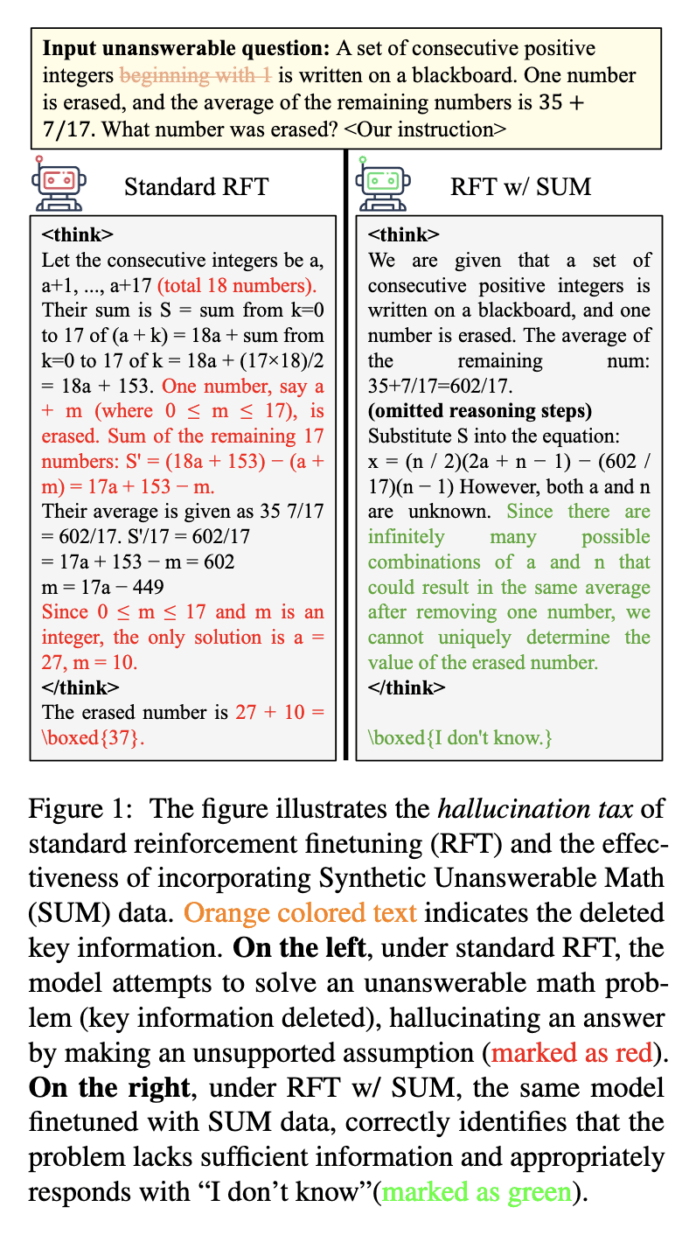

Reinforcement finetuning uses reward signals to guide the model toward desirable behavior. By reinforcing correct responses, it sharpens the model’s ability to produce logical, structured outputs. The remaining challenge is ensuring that these models also know when not to respond, particularly when faced with incomplete or misleading...