Benchmarks play a central role in AI research, steering both progress and investment decisions. Chatbot Arena is the go-to leaderboard for comparing large language models, but a systematic review of it reveals issues that have created an uneven playing field, potentially undermining scientific integrity and reliable model evaluation.

How Chatbot Arena works and why it matters

Chatbot Arena has become the primary benchmark for evaluating generative AI models. Users submit prompts and judge which of two anonymous models performs better. This human-in-the-loop evaluation has gained prominence because it captures real-world usage better than static academic benchmarks.

The researchers conducted a systematic review of Chatbot Arena, analyzing data from 2 million battles covering 243 models across 42 providers from January 2024 to April 2025. Their findings reveal how certain practices have distorted the leaderboard rankings.

The Arena uses the Bradley-Terry (BT) model to rank participants based on pairwise comparisons. Unlike simple win-rate calculations, BT accounts for opponent strength and provides statistically grounded rankings. However, this approach relies on key assumptions: unbiased sampling, transitivity of rankings (if A beats B and B beats C, then A should beat C), and a sufficiently connected comparison graph.
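To make the ranking step concrete, here is a minimal sketch of fitting a Bradley-Terry model with the classic iterative (minorization-maximization) update. It is an illustration, not the Arena's actual pipeline: the win counts are made up, the function names are hypothetical, and the 1000-point base with a 400-point log10 scale is an assumed Elo-style convention.

```python
import math

def fit_bradley_terry(wins, n_iter=200):
    """Estimate BT strengths from a matrix where wins[i][j] counts
    how often model i beat model j.

    Assumes every model has at least one win and that the comparison
    graph is connected; otherwise the estimates are not well defined.
    """
    n = len(wins)
    p = [1.0] * n  # start with equal strengths
    for _ in range(n_iter):
        new_p = []
        for i in range(n):
            total_wins = sum(wins[i])
            # Each opponent contributes games played / combined strength.
            denom = sum(
                (wins[i][j] + wins[j][i]) / (p[i] + p[j])
                for j in range(n)
                if j != i
            )
            new_p.append(total_wins / denom)
        # Strengths are only defined up to a constant factor, so
        # normalize the geometric mean to 1.
        log_mean = sum(math.log(x) for x in new_p) / n
        p = [x / math.exp(log_mean) for x in new_p]
    return p

def to_rating_scale(strengths, base=1000.0, scale=400.0):
    """Map strengths to an Elo-style scale (base and scale are assumptions)."""
    return [base + scale * math.log10(s) for s in strengths]

def win_probability(strengths, i, j):
    """BT probability that model i beats model j."""
    return strengths[i] / (strengths[i] + strengths[j])

# Hypothetical battle counts for three models A, B, C (100 games per pair).
wins = [
    [0, 60, 80],  # A beat B 60 times and C 80 times
    [40, 0, 70],  # B beat A 40 times and C 70 times
    [20, 30, 0],  # C beat A 20 times and B 30 times
]

strengths = fit_bradley_terry(wins)
ratings = to_rating_scale(strengths)
for name, rating in zip("ABC", ratings):
    print(name, round(rating, 1))
```

Unlike a raw win rate, each model's estimate depends on the strength of the opponents it faced, which is why biased sampling or a poorly connected comparison graph can distort the resulting order.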

The private testing advantage

One of the most concerning findings is an undisclosed policy that allows certain providers to test multiple model variants privately before public release, then selectively submit only their best-performing model to the leaderboard.

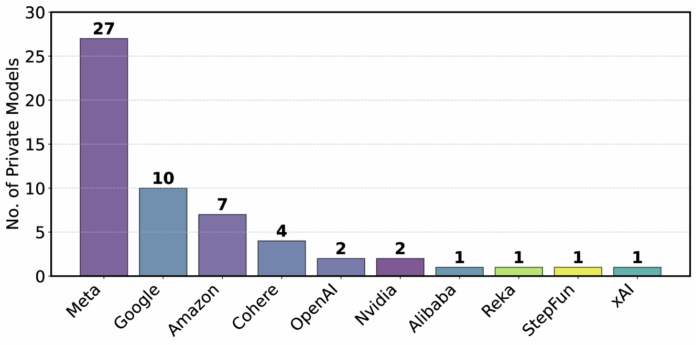

The researchers discovered that Meta tested 27 private variants before the Llama-4 release, while Google tested 10. In contrast, smaller companies like Reka had only one active private variant, and academic labs tested no private models during this period.

This “best-of-N” submission strategy violates the BT model’s unbiased sampling assumption: the published score is the maximum of several noisy estimates rather than a single unbiased one. By simulating this effect, the researchers showed that testing just 10 variants yields an increase of approximately 100 points in the maximum score identified.
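The selection effect behind best-of-N can be illustrated with a small Monte Carlo sketch. This is not the researchers' simulation: it assumes every variant has the same true score and that each measured score carries independent Gaussian noise. The function name and the `noise_sd` value are hypothetical, so the printed numbers will not match the study's figure.

```python
import random
import statistics

def expected_best_of_n(n_variants, true_score=1000.0, noise_sd=30.0,
                       trials=5000, seed=0):
    """Average of the highest measured score among n_variants variants
    that all share the same true score.

    noise_sd is an assumed measurement noise level, not a value taken
    from the study.
    """
    rng = random.Random(seed)
    best_scores = [
        max(rng.gauss(true_score, noise_sd) for _ in range(n_variants))
        for _ in range(trials)
    ]
    return statistics.mean(best_scores)

# Compare submitting a single variant with picking the best of several.
for n in (1, 3, 10):
    print(n, round(expected_best_of_n(n), 1))
```

Even though no variant is actually better than another in this setup, reporting only the maximum inflates the expected score, and the inflation grows with the number of variants tested.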

The researchers validated these simulation results through real-world experiments on Chatbot Arena. They submitted two identical checkpoints of Aya-Vision-8B and found they achieved different scores (1052 vs. 1069), with four models positioned between these identical variants on the leaderboard. When testing two different variants of Aya-Vision-32B, they observed even larger score differences (1059 vs. 1097), with nine models positioned between them.

Extreme data access disparities

The research reveals substantial inequalities in access to data from Chatbot Arena battles, stemming from three main factors: