LLM APIs create a significant trust challenge: users pay for access to specific models based on advertised capabilities, but providers might secretly substitute these with cheaper alternatives to save costs. This lack of transparency not only undermines fairness but also erodes trust and complicates reliable benchmarking.

A paper from UC Berkeley systematically evaluates how well model substitution can be detected in LLM APIs. The problem is hard because users typically interact with models through black-box interfaces, receiving only text outputs and limited metadata. A dishonest provider could replace an expensive model (such as Llama-3.1-405B) with a smaller, cheaper one (such as Llama-3.1-70B) or with a quantized version, cutting costs while still claiming to provide the premium service.

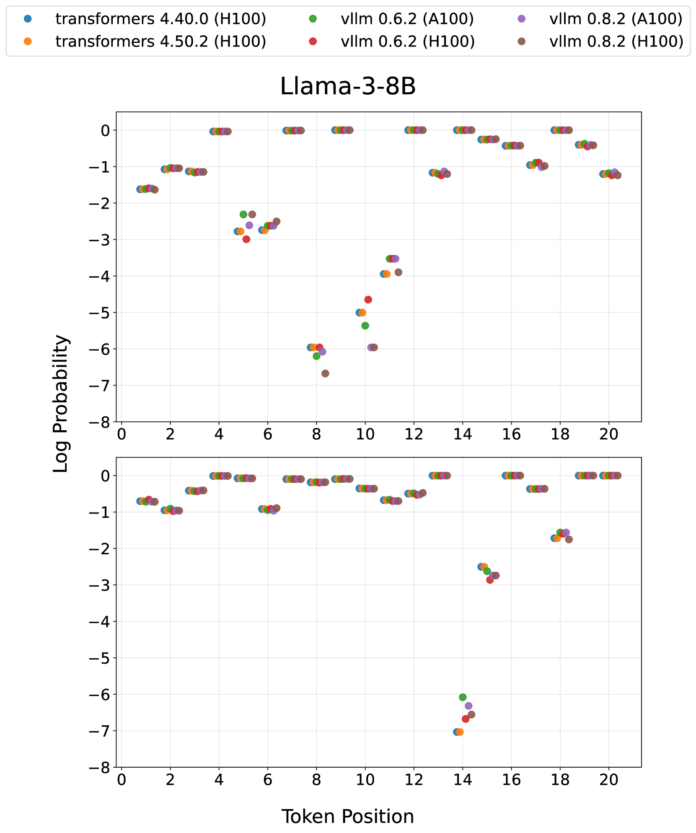

The paper formalizes this verification problem and evaluates various detection techniques against realistic attack scenarios, highlighting the limitations of methods that rely solely on text outputs. While log probability analysis offers stronger guarantees, its accessibility depends entirely on provider transparency. The researchers also examine the potential of hardware-based solutions like Trusted Execution Environments (TEEs) as a more robust verification approach.
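To make the log probability idea concrete, here is a minimal sketch of one way such a check could look. It is not the paper's method. It assumes the API returns per-token log probabilities for a fixed prompt and continuation, and that the user can compute the same quantities with a trusted reference copy of the advertised model. The function names, the sample numbers, and the `tolerance` value are all hypothetical; in practice a tolerance would have to be calibrated, since honest deployments can also differ slightly because of hardware and numerical nondeterminism.

```python
from statistics import mean


def mean_abs_logprob_gap(reference, observed):
    """Mean absolute difference between two aligned lists of token log probabilities."""
    if len(reference) != len(observed):
        raise ValueError("log-probability lists must have the same length")
    if not reference:
        raise ValueError("need at least one token")
    return mean(abs(r - o) for r, o in zip(reference, observed))


def looks_substituted(reference, observed, tolerance=0.05):
    """Flag a response whose log probabilities drift past the tolerance.

    `tolerance` is an illustrative assumption, not a value from the paper.
    """
    return mean_abs_logprob_gap(reference, observed) > tolerance


# Illustrative numbers only; these are not measurements from any real model.
reference_logprobs = [-0.12, -1.50, -0.03, -2.20]    # trusted local copy
same_model_logprobs = [-0.12, -1.51, -0.03, -2.19]   # small numerical noise
other_model_logprobs = [-0.40, -0.90, -0.25, -3.10]  # a different model

print(looks_substituted(reference_logprobs, same_model_logprobs))
print(looks_substituted(reference_logprobs, other_model_logprobs))
```

A check like this only works when the provider exposes log probabilities at all, which is why its usefulness depends on provider transparency.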

Current Approaches to LLM API Verification

Existing research in LLM API auditing has explored several avenues. Some studies have monitored commercial LLM behaviors over time, tracking changes in capabilities and performance drift. For instance, Chen et al. (2023) documented behavioral changes in ChatGPT, while Eyuboglu et al. (2024) characterized updates to API-accessed ML models.