In this tutorial, we’ll learn how to leverage the Adala framework to build a modular active learning pipeline for medical symptom classification. We begin by installing and verifying Adala alongside required dependencies, then integrate Google Gemini as a custom annotator to categorize symptoms into predefined medical domains. Through a simple three-iteration active learning loop that prioritizes critical symptoms such as chest pain, we’ll see how to select, annotate, and visualize classification confidence, gaining practical insights into model behavior and Adala’s extensible architecture.

!pip install -q git+https://github.com/HumanSignal/Adala.git

!pip list | grep adala

We install the latest Adala code directly from its GitHub repository. The subsequent pip list | grep adala command then scans your environment’s package list for any entries containing “adala,” providing a quick confirmation that the library was installed successfully.
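The same check can be done from Python instead of the shell. The sketch below uses the standard library's importlib.metadata; the helper name installed_version is ours, and it assumes the distribution is registered under the name "adala", as the grep above suggests.

```python
from importlib import metadata


def installed_version(dist_name):
    """Return the installed version string for dist_name, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


# Assumption: the distribution name is "adala"; prints None if it is not installed.
print("adala version:", installed_version("adala"))
```

A None result here means pip did not register the package, which is a cue to rerun the install cell before continuing.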

import sys

import os

print("Python path:", sys.path)

print("Checking if adala is in installed packages...")

!find /usr/local -name "*adala*" -type d | grep -v "__pycache__"

!git clone https://github.com/HumanSignal/Adala.git

!ls -la Adala

We print the current Python module search paths and then search the /usr/local directory for any installed “adala” folders (excluding __pycache__) to verify the package is available. Next, we clone the Adala GitHub repository into the working directory and list its contents to confirm that all source files have been fetched correctly.

import sys

sys.path.append('/content/Adala')

By appending the cloned Adala folder to sys.path, we add /content/Adala to Python’s module search path, so the adala package inside the clone becomes importable. Because append places the folder at the end of the search order, an installed copy of adala, if present, is still found first; the clone acts as a fallback rather than an override.
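Python resolves imports by scanning sys.path from front to back, so where a folder sits in that list decides which copy of a package wins. The following sketch shows one way to control that position; add_search_path and its prefer flag are hypothetical helpers for illustration, not part of Adala.

```python
import sys


def add_search_path(path, prefer=False):
    """Add path to sys.path once and return its index in the search order.

    prefer=True places it first, ahead of installed packages;
    prefer=False places it last, so installed packages still win.
    """
    if path in sys.path:
        sys.path.remove(path)
    if prefer:
        sys.path.insert(0, path)
    else:
        sys.path.append(path)
    return sys.path.index(path)


# Put the clone first so imports resolve to it before any installed copy.
position = add_search_path("/content/Adala", prefer=True)
print("Search position of the clone:", position)
```

Preferring the clone is useful when you plan to edit Adala's source and want those edits picked up; leaving it last keeps the pip-installed version authoritative.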

!pip install -q google-generativeai pandas matplotlib

import google.generativeai as genai

import pandas as pd

import json

import re

import numpy as np

import matplotlib.pyplot as plt

from getpass import getpass

We install the Google Generative AI SDK alongside data-analysis and plotting libraries (pandas and matplotlib), then import the key modules: genai for interacting with Gemini, pandas for tabular data, json and re for parsing, numpy for numerical operations, matplotlib.pyplot for visualization, and getpass to prompt the user for their API key securely.

try:
    from Adala.adala.annotators.base import BaseAnnotator
    from Adala.adala.strategies.random_strategy import RandomStrategy
    from Adala.adala.utils.custom_types import TextSample, LabeledSample
    print("Successfully imported Adala components")
except Exception as e:
    print(f"Error importing: {e}")
    print("Falling back to simplified implementation...")

This try/except block attempts to load Adala’s core classes (BaseAnnotator, RandomStrategy, TextSample, and LabeledSample) so that we can use its built-in annotators and sampling strategies. On success, it confirms that the Adala components are available; if any import fails, it catches the error, prints the exception message, and announces the fallback. The remaining steps rely on lightweight stand-in classes defined in this notebook, so the tutorial runs either way.
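If the imports fail, the later cells need something that behaves like a text sample and a labeled sample. One possible shape for such a fallback is sketched below with dataclasses; SimpleTextSample and SimpleLabeledSample are hypothetical names for this illustration and are not Adala's own types, and the label and confidence shown are made-up example values.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleTextSample:
    """Minimal stand-in holding only the raw text to classify."""
    text: str


@dataclass
class SimpleLabeledSample:
    """Minimal stand-in pairing text with a label and free-form metadata."""
    text: str
    labels: str
    metadata: dict = field(default_factory=dict)


sample = SimpleTextSample(text="Persistent dry cough with occasional wheezing")
# Illustrative values only; a real label would come from the annotator.
labeled = SimpleLabeledSample(
    text=sample.text,
    labels="Respiratory",
    metadata={"confidence": 0.9},
)
print(labeled)
```

Using default_factory gives every sample its own metadata dictionary, which avoids the shared-mutable-default pitfall.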

GEMINI_API_KEY = getpass("Enter your Gemini API Key: ")

genai.configure(api_key=GEMINI_API_KEY)

We securely prompt you to enter your Gemini API key without echoing it to the notebook. Then we configure the Google Generative AI client (genai) with that key to authenticate all subsequent calls.

CATEGORIES = ["Cardiovascular", "Respiratory", "Gastrointestinal", "Neurological"]

class GeminiAnnotator:
    def __init__(self, model_name="models/gemini-2.0-flash-lite", categories=None):
        # A low temperature keeps the classification output stable across calls.
        self.model = genai.GenerativeModel(model_name=model_name,
                                           generation_config={"temperature": 0.1})
        # Fall back to the module-level list so the prompt can always be built.
        self.categories = categories or CATEGORIES

    def annotate(self, samples):
        results = []
        for sample in samples:
            prompt = f"""Classify this medical symptom into one of these categories:
            {', '.join(self.categories)}.
            Return JSON format: {{"category": "selected_category",
            "confidence": 0.XX, "explanation": "brief_reason"}}

            SYMPTOM: {sample.text}"""
            try:
                response = self.model.generate_content(prompt).text
                # The reply may wrap the JSON in extra text, so extract the object first.
                json_match = re.search(r'({.*})', response, re.DOTALL)
                result = json.loads(json_match.group(1) if json_match else response)
                # type(...) builds a lightweight class whose attributes act as the record.
                labeled_sample = type('LabeledSample', (), {
                    'text': sample.text,
                    'labels': result["category"],
                    'metadata': {
                        "confidence": result["confidence"],
                        "explanation": result["explanation"]
                    }
                })
            except Exception as e:
                # Any API or parsing failure yields an "unknown" label with the error attached.
                labeled_sample = type('LabeledSample', (), {
                    'text': sample.text,
                    'labels': "unknown",
                    'metadata': {"error": str(e)}
                })
            results.append(labeled_sample)
        return results

We define a list of medical categories and implement a GeminiAnnotator class that wraps Google Gemini’s generative model for symptom classification. In its annotate method, it builds a JSON-returning prompt for each text sample, parses the model’s response into a structured label, confidence score, and explanation, and wraps those into lightweight LabeledSample objects, falling back to an “unknown” label if any errors occur.
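The parsing step is the most fragile part of the annotator, because the model may surround the JSON with extra text or return a category outside the allowed set. A minimal sketch of a stricter parser follows; parse_annotation is a hypothetical helper, the validation rules are our assumptions, and the reply string is a hand-written example rather than real Gemini output.

```python
import json
import re


def parse_annotation(response_text, categories):
    """Extract the first JSON object from a model reply and validate its fields."""
    match = re.search(r"\{.*\}", response_text, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in response")
    data = json.loads(match.group(0))

    category = data.get("category")
    if category not in categories:
        raise ValueError(f"unexpected category: {category!r}")

    # Treat a missing or non-numeric confidence as 0.0, then clamp into [0, 1].
    try:
        confidence = float(data.get("confidence", 0.0))
    except (TypeError, ValueError):
        confidence = 0.0
    confidence = min(max(confidence, 0.0), 1.0)

    return {
        "category": category,
        "confidence": confidence,
        "explanation": str(data.get("explanation", "")),
    }


# Hand-written reply for illustration; not actual model output.
reply = 'Result: {"category": "Cardiovascular", "confidence": 0.92, "explanation": "Exertional chest pain"}'
print(parse_annotation(reply, ["Cardiovascular", "Respiratory"]))
```

Raising on an unexpected category lets the caller route the sample to the “unknown” fallback instead of silently storing a label the rest of the pipeline does not recognize.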

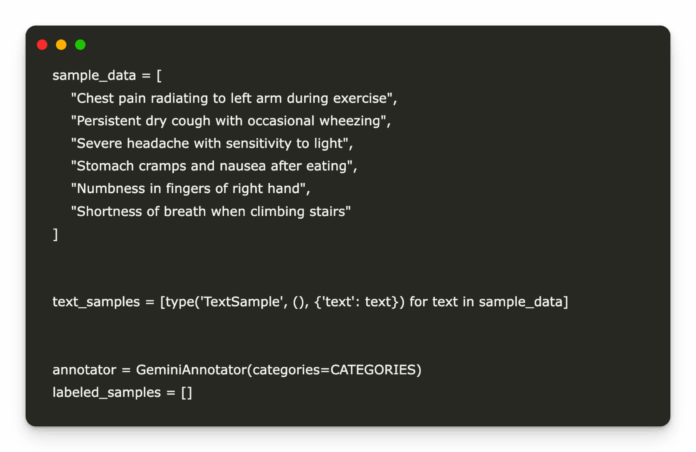

sample_data = [
    "Chest pain radiating to left arm during exercise",
    "Persistent dry cough with occasional wheezing",
    "Severe headache with sensitivity to light",
    "Stomach cramps and nausea after eating",
    "Numbness in fingers of right hand",
    "Shortness of breath when climbing stairs"
]

text_samples = [type('TextSample', (), {'text': text}) for text in sample_data]

annotator = GeminiAnnotator(categories=CATEGORIES)
labeled_samples = []

We define a list of raw symptom strings and wrap each in a lightweight TextSample object to pass to the annotator. We then instantiate GeminiAnnotator with the predefined category set and prepare an empty labeled_samples list to store the results of the upcoming annotation iterations.

print("\nRunning Active Learning Loop:")
for i in range(3):
    print(f"\n--- Iteration {i+1} ---")

    # Skip samples that were already annotated in an earlier iteration.
    remaining = [s for s in text_samples if s not in [getattr(l, '_sample', l) for l in labeled_samples]]
    if not remaining:
        break

    # Base score of 0.1, plus 0.5 when the text mentions a priority keyword.
    scores = np.zeros(len(remaining))
    for j, sample in enumerate(remaining):
        scores[j] = 0.1
        if any(term in sample.text.lower() for term in ["chest", "heart", "pain"]):
            scores[j] += 0.5

    # argmax returns the first highest-scoring sample when scores tie.
    selected_idx = np.argmax(scores)
    selected = [remaining[selected_idx]]

    newly_labeled = annotator.annotate(selected)
    for sample in newly_labeled:
        # Remember the source sample so it can be filtered out next time.
        sample._sample = selected[0]
    labeled_samples.extend(newly_labeled)

    latest = labeled_samples[-1]
    print(f"Text: {latest.text}")
    print(f"Category: {latest.labels}")
    print(f"Confidence: {latest.metadata.get('confidence', 0)}")
    print(f"Explanation: {latest.metadata.get('explanation', '')[:100]}...")

This active-learning loop runs for three iterations, each time filtering out already-labeled samples and assigning every remaining sample a base score of 0.1, boosted by 0.5 when its text contains “chest,” “heart,” or “pain,” to prioritize critical symptoms. It then selects the highest-scoring sample, invokes the GeminiAnnotator to generate a category, confidence, and explanation, and prints those details for review.
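The selection rule can be isolated from the API call and exercised on its own. The sketch below reimplements the same keyword-based scoring on plain strings; priority_score and select_next are hypothetical helper names, and no Gemini call is made.

```python
PRIORITY_TERMS = ("chest", "heart", "pain")


def priority_score(text, base=0.1, boost=0.5, terms=PRIORITY_TERMS):
    """Return base, plus boost if any priority term appears in the text."""
    lowered = text.lower()
    return base + (boost if any(term in lowered for term in terms) else 0.0)


def select_next(texts, already_labeled):
    """Pick the highest-scoring unlabeled text, or None when nothing is left.

    On ties, max() keeps the earliest item, matching np.argmax behavior.
    """
    remaining = [t for t in texts if t not in already_labeled]
    if not remaining:
        return None
    return max(remaining, key=priority_score)


pool = [
    "Persistent dry cough with occasional wheezing",
    "Chest pain radiating to left arm during exercise",
    "Severe headache with sensitivity to light",
]
labeled = set()
for iteration in range(3):
    choice = select_next(pool, labeled)
    if choice is None:
        break
    labeled.add(choice)
    print(f"Iteration {iteration + 1}: {choice}")
```

Note that the match is a plain substring test, so “pain” also fires on words such as “painless”; a production rule would likely use word boundaries or a curated symptom list.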

categories = [s.labels for s in labeled_samples]

confidence = [s.metadata.get("confidence", 0) for s in labeled_samples]

plt.figure(figsize=(10, 5))

plt.bar(range(len(categories)), confidence, color='skyblue')

plt.xticks(range(len(categories)), categories, rotation=45)

plt.title('Classification Confidence by Category')

plt.tight_layout()

plt.show()

Finally, we extract the predicted category labels and their confidence scores and use Matplotlib to plot a vertical bar chart with one bar per labeled sample, where each bar’s height reflects the model’s confidence in that prediction. The category names on the x-axis are rotated for readability, a title is added, and tight_layout() ensures the chart elements are neatly arranged before display.
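Because the chart draws one bar per sample, two samples with the same predicted category appear as two separate bars. If a single bar per category is preferred, the scores can be averaged first. This is a minimal sketch using only the standard library; mean_confidence_by_category is a hypothetical helper, and the records are made-up values, not results from the run above.

```python
from collections import defaultdict


def mean_confidence_by_category(records):
    """Average confidence per category from (category, confidence) pairs."""
    totals = defaultdict(lambda: [0.0, 0])  # category -> [sum, count]
    for category, confidence in records:
        totals[category][0] += confidence
        totals[category][1] += 1
    return {category: total / count for category, (total, count) in totals.items()}


# Illustrative values only.
records = [("Cardiovascular", 0.9), ("Respiratory", 0.8), ("Cardiovascular", 0.7)]
averages = mean_confidence_by_category(records)
print(averages)
```

The keys and values of the resulting dictionary can be passed to plt.bar in place of the per-sample lists.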

In conclusion, by pairing an Adala-style modular pipeline with the generative power of Google Gemini, we’ve constructed a streamlined workflow that iteratively selects and annotates medical text. This tutorial walked you through installation, setup, and a bespoke GeminiAnnotator, and demonstrated how to implement priority-based sampling and confidence visualization. With this foundation, you can swap in other models, expand your category set, or integrate more advanced active learning strategies to tackle larger and more complex annotation tasks.
