Puzzle-based experiments reveal limitations of simulated reasoning, but others dispute the findings.

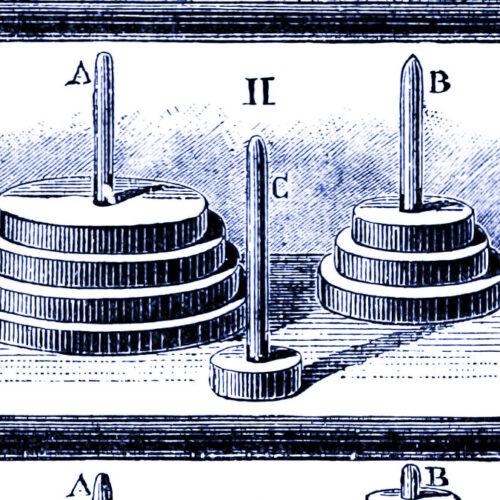

An 1885 Popular Science illustration of the Tower of Hanoi. Credit: Public Domain

In early June, Apple researchers released a study suggesting that simulated reasoning (SR) models, such as OpenAI’s o1 and o3, DeepSeek-R1, and Claude 3.7 Sonnet Thinking, produce outputs consistent with pattern-matching from training data when faced with novel problems requiring systematic thinking. The researchers found results similar to those of a recent April study based on the United States of America Mathematical Olympiad (USAMO), which showed that these same models achieved low scores on novel mathematical proofs.

The study, titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity,” comes from a team at Apple led by Parshin Shojaee and Iman Mirzadeh, with contributions from Keivan Alizadeh, Maxwell Horton, Samy Bengio, and Mehrdad Farajtabar.

The researchers examined what they call “large reasoning models” (LRMs), which attempt to simulate a logical reasoning process by producing a deliberative text output, sometimes called “chain-of-thought reasoning,” that ostensibly helps solve problems step by step. To do this, they tested the AI models on four classic puzzles: Tower of Hanoi (moving disks between pegs), checker jumping (eliminating items), river crossing (transporting objects under constraints), and Blocks World (stacking blocks). They scaled each puzzle from trivially simple (like one-disk Hanoi) to extremely complex.

Credit: Apple

“Current evaluations primarily focus on established mathematical and coding benchmarks, emphasizing final answer accuracy,” the researchers write. In other words, today’s tests only check whether a model gets the right answer to math or coding problems that may already be in its training data. They don’t examine whether the model actually reasoned its way to the answer or simply matched patterns from examples it had seen before.

The researchers’ findings were consistent with the USAMO research, which showed that the same models performed poorly on novel mathematical proofs: only one model reached 25 percent, and not a single proof among nearly 200 attempts was perfect. Both research teams documented severe performance degradation on problems requiring extended, systematic reasoning.

New evidence and skeptics

AI researcher Gary Marcus, who has long argued that neural networks struggle with out-of-distribution generalization, called the Apple results “pretty devastating to LLMs.” Marcus has been making similar arguments for years and is well known for his AI skepticism, but the new research gives his particular brand of criticism fresh empirical support. Marcus wrote that AI pioneer Herb Simon solved the Tower of Hanoi puzzle in 1957 and that many algorithmic solutions are available on the web. He also noted that even when the researchers provided explicit solution algorithms for Tower of Hanoi, model performance did not improve, a finding that study co-lead Iman Mirzadeh argued shows that “their process is not logical and intelligent.”
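For a sense of how compact such an algorithmic solution is, here is a textbook recursive Tower of Hanoi solver in Python. It is a minimal sketch for illustration only, not the algorithm text the Apple researchers supplied to the models; the function name `hanoi`, the peg labels, and the (disk, from, to) move format are hypothetical choices.

```python
def hanoi(n, source="A", target="C", spare="B", moves=None):
    """Return the list of (disk, from_peg, to_peg) moves that solves n-disk Tower of Hanoi.

    Hypothetical helper for illustration; disk 1 is the smallest, disk n the largest.
    """
    if moves is None:
        moves = []
    if n == 0:
        return moves
    # Move the n-1 smaller disks out of the way, onto the spare peg.
    hanoi(n - 1, source, spare, target, moves)
    # Move the largest remaining disk directly to the target peg.
    moves.append((n, source, target))
    # Restack the n-1 smaller disks from the spare peg on top of it.
    hanoi(n - 1, spare, target, source, moves)
    return moves


print(hanoi(2))  # [(1, 'A', 'B'), (2, 'A', 'C'), (1, 'B', 'C')]
```

The same dozen lines solve a puzzle of any size, which helps explain why Marcus found it notable that handing the models an explicit algorithm did not improve their results.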


Figure 4 from Apple’s “The Illusion of Thinking” research paper. Credit: Apple

The Apple team found that simulated reasoning models behave differently from “standard” models (like GPT-4o) depending on puzzle difficulty. On easy tasks, such as Tower of Hanoi with just a few disks, standard models actually won because reasoning models would “overthink” and generate long chains of thought that led to incorrect answers. On moderately difficult tasks, SR models’ methodical approach gave them an edge. But on truly difficult tasks, including Tower of Hanoi with 10 or more disks, both types failed entirely, unable to complete the puzzles no matter how much time they were given.
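One reason difficulty climbs so steeply is that the shortest solution to an n-disk Tower of Hanoi takes 2^n - 1 moves, so each added disk roughly doubles the length of a correct answer. A quick Python sketch of that arithmetic (the helper name `min_moves` is hypothetical):

```python
def min_moves(n: int) -> int:
    """Minimum number of moves to solve an n-disk Tower of Hanoi puzzle: 2**n - 1."""
    return 2 ** n - 1


# Each extra disk roughly doubles the length of the shortest correct solution.
for n in (1, 3, 5, 10, 15, 20):
    print(f"n = {n:>2}: {min_moves(n):>9,} moves")
```

By this count, a 10-disk puzzle already requires 1,023 moves, and a 20-disk puzzle requires 1,048,575.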

The researchers also identified what they call a “counterintuitive scaling limit.” As problem complexity increases, simulated reasoning models initially generate more thinking tokens but then reduce their reasoning effort beyond a threshold, despite having adequate computational resources.

The study also revealed puzzling inconsistencies in how models fail. Claude 3.7 Sonnet could perform up to 100 correct moves in Tower of Hanoi but failed after just five moves in a river crossing puzzle, despite the latter requiring fewer total moves. This suggests the failures may be task-specific rather than purely computational.
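Counting “correct moves” this way means replaying a model’s proposed solution against the puzzle rules and stopping at the first illegal step. The sketch below shows what such a checker might look like for Tower of Hanoi; it is a hypothetical illustration, not Apple’s evaluation code, and it assumes moves arrive as (from_peg, to_peg) index pairs with pegs numbered 0 to 2.

```python
from typing import List, Tuple


def count_valid_moves(n: int, moves: List[Tuple[int, int]]) -> Tuple[int, bool]:
    """Replay moves on an n-disk Tower of Hanoi (hypothetical checker).

    Returns (number of legal moves before the first illegal one, whether the puzzle ended solved).
    """
    # Each peg is a list with its top disk last; disk n is the largest and starts at the bottom of peg 0.
    pegs: List[List[int]] = [list(range(n, 0, -1)), [], []]
    for i, (src, dst) in enumerate(moves):
        # Reject unknown pegs and no-op moves.
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or src == dst:
            return i, False
        # Reject moving from an empty peg.
        if not pegs[src]:
            return i, False
        disk = pegs[src][-1]
        # Reject placing a larger disk on top of a smaller one.
        if pegs[dst] and pegs[dst][-1] < disk:
            return i, False
        pegs[dst].append(pegs[src].pop())
    # Every move was legal; the puzzle is solved only if all disks ended up on peg 2.
    return len(moves), len(pegs[2]) == n


# A correct 2-disk solution: three legal moves, puzzle solved.
print(count_valid_moves(2, [(0, 1), (0, 2), (1, 2)]))  # (3, True)
# The second move tries to put disk 2 on top of disk 1, so only one move counts.
print(count_valid_moves(2, [(0, 1), (0, 1)]))  # (1, False)
```

A separate checker with its own rules would be needed for each of the other puzzles, such as river crossing.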

Competing interpretations emerge

However, not all researchers agree that these results demonstrate fundamental reasoning limitations. University of Toronto economist Kevin A. Bryan argued on X that the observed limitations may reflect deliberate training constraints rather than inherent inability.

“If you tell me to solve a problem that would take me an hour of pen and paper, but give me five minutes, I’ll probably give you an approximate solution or a heuristic. This is exactly what foundation models with thinking are RL’d to do,” Bryan wrote, suggesting that models are specifically trained through reinforcement learning (RL) to avoid excessive computation. Bryan suggests that unspecified industry benchmarks show “performance strictly increases as we increase in tokens used for inference, on ~every problem domain tried,” but notes that deployed models deliberately limit this to prevent “overthinking” on simple queries. This perspective suggests that the Apple paper may be measuring engineered constraints rather than fundamental reasoning limitations.

Figure 6 from Apple’s “The Illusion of Thinking” research paper. Credit: Apple

Some researchers also question whether these puzzle-based evaluations are even well suited to LLMs. Independent AI researcher Simon Willison told Ars Technica in an interview that the Tower of Hanoi approach was “not exactly a sensible way to apply LLMs, with or without reasoning.” He suggested the failures might simply reflect running out of tokens in the context window (the maximum amount of text a model can process). He characterized the paper as potentially overblown research that gained attention because of its “irresistible headline” about Apple claiming that LLMs don’t reason.

Apple researchers caution against over-extrapolating their study’s results, acknowledging that “puzzle environments represent a narrow slice of reasoning tasks and may not capture the diversity of real-world or knowledge-intensive reasoning problems.” In their limitations section, they acknowledge that reasoning models continue to demonstrate utility in real-world applications.

The implications remain contested

Have these studies completely destroyed the credibility of claims about AI reasoning models? Not necessarily.

Instead, these studies may indicate that the extended context reasoning hacks used by SR models are not the path to general intelligence that some had hoped for. If so, the path toward more robust reasoning abilities may require fundamentally new approaches rather than refinements of current methods.

Willison noted that the Apple study’s results have so far been explosive in the AI community. Generative AI is a controversial topic, with many people gravitating toward extreme positions in an ongoing ideological battle over the models’ general utility. Many proponents of generative AI have contested the Apple results, while critics have seized on the study as a definitive knockout blow to LLM credibility.

Apple’s results, combined with the USAMO findings, seem to strengthen the case made by critics like Marcus that these systems rely more on elaborate pattern matching than on the kind of systematic reasoning their marketing might suggest. To be fair, the generative AI field is so new that even its inventors do not yet fully understand how or why their techniques work. In the meantime, AI companies could build trust by tempering their claims about intelligence and reasoning breakthroughs. That doesn’t make these AI models useless, however. Even elaborate pattern-matching systems can be useful for labor-saving tasks, provided the people using them understand their limitations and confabulations. As Marcus concedes, “At least for the next decade, LLMs (with and without inference time ‘reasoning’) will continue [to] have their uses, especially for coding and brainstorming and writing.”

Benj Edwards is Ars Technica’s Senior AI Reporter and founder of the site’s dedicated AI beat in 2022. He’s also a tech historian with almost two decades of experience. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.