Contrastive Language-Image Pre-training (CLIP) models have become fundamental components in modern vision-language systems. As these models are increasingly integrated into large vision-language models (VLMs), their vulnerability to adversarial attacks has emerged as a critical security concern.

While previous research has focused on creating sample-specific perturbations or limited universal attacks, a comprehensive understanding of “super transferability” — where a single perturbation works across different data samples, domains, models, and tasks — has remained unexplored until now.

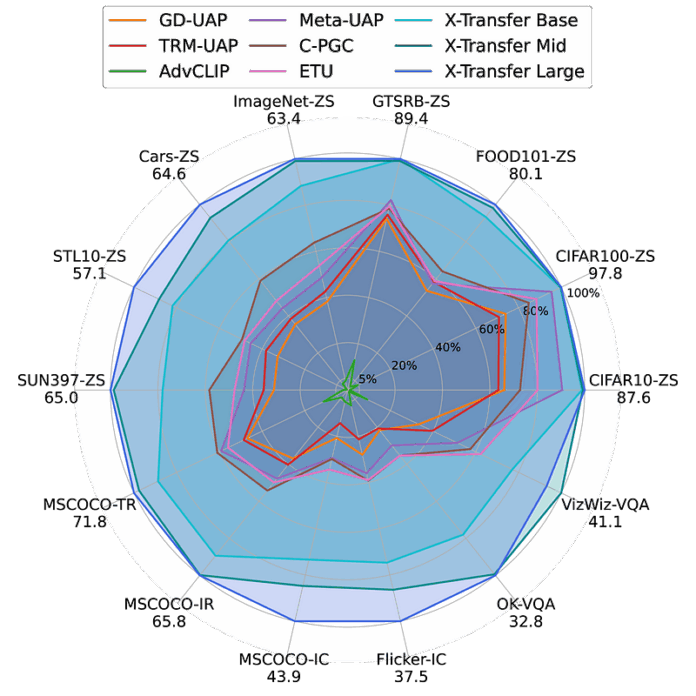

X-Transfer generates Universal Adversarial Perturbations (UAPs) that expose a fundamental vulnerability in CLIP models. Unlike existing methods that use fixed surrogate models, X-Transfer employs an efficient “surrogate scaling” strategy that dynamically selects suitable surrogate models from a large pool, making it both more effective and more computationally efficient.

What makes this research particularly significant is the demonstration that a single perturbation can simultaneously transfer across data, domains, models, and tasks — a property defined as “super transferability.” This capability poses a new safety risk for vision-language systems and calls for more robust defense mechanisms.

Understanding the Landscape of CLIP and Adversarial Attacks

CLIP has revolutionized vision-language learning by pre-training on web-scale text-image pairs through contrastive learning. Its strong generalization capabilities have made it the backbone for numerous VLMs, including Flamingo, LLaVA, BLIP2, and MiniGPT-4.

Adversarial attacks against neural networks fall into two main categories: white-box attacks, where the attacker has complete knowledge of the victim model, and black-box attacks, where this information is unavailable. Transfer-based attacks, a type of black-box attack, are particularly concerning because they are practical: they don’t require suspicious queries to the victim model.

Previous work on adversarial attacks against CLIP has primarily focused on sample-specific perturbations, which are tailored to individual images. Universal Adversarial Perturbations (UAPs), in contrast, can fool models across different samples.
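To make the distinction concrete, here is a minimal sketch of how a universal perturbation is applied: one shared `delta` is added to every image in a batch, rather than a separate perturbation per image. The function name `apply_uap`, the random stand-in data, and the budget `epsilon = 8/255` are illustrative assumptions, not values taken from X-Transfer.

```python
import numpy as np

def apply_uap(images, delta, epsilon=8 / 255):
    """Add one shared perturbation to every image in a batch.

    images: array of shape (N, C, H, W) with pixel values in [0, 1]
    delta:  array of shape (C, H, W), the universal perturbation
    epsilon: L-infinity budget (an illustrative choice, not from the source)
    """
    # Keep the perturbation inside the L-infinity ball of radius epsilon.
    delta = np.clip(delta, -epsilon, epsilon)
    # Broadcasting adds the same delta to all N images; clip to valid pixels.
    return np.clip(images + delta, 0.0, 1.0)

rng = np.random.default_rng(0)
images = rng.random((4, 3, 32, 32))        # stand-in batch of 4 images
delta = rng.uniform(-1, 1, (3, 32, 32))    # stand-in (unoptimized) perturbation

adv = apply_uap(images, delta)
max_change = np.abs(adv - images).max()    # never exceeds epsilon
```

A sample-specific attack would instead carry a `delta` of shape `(N, C, H, W)`, optimized separately for each image. In a real attack the shared `delta` would be optimized against one or more surrogate encoders rather than drawn at random.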

Recent research has introduced UAPs against CLIP encoders, including AdvCLIP, ETU, and C-PGC. However, none has achieved comprehensive super transferability — the ability to transfer across data, domains, models, and tasks simultaneously. This capability is the primary focus and contribution of X-Transfer.

The Mechanics of X-Transfer: Creating Super Transferable Attacks

To understand how X-Transfer works, we first need to understand CLIP’s training objective. CLIP learns a joint embedding space for images and text through contrastive learning. An image encoder and a text encoder project both modalities into this shared space, where related image-text pairs are pulled closer together while unrelated pairs are pushed apart.
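The contrastive objective can be sketched as a symmetric cross-entropy over a matrix of cosine similarities. This is a simplified NumPy illustration, not CLIP's actual training code: the random vectors stand in for encoder outputs, and the temperature of 0.07 is an assumed fixed value (in CLIP the temperature is learned).

```python
import numpy as np

def l2_normalize(x):
    # Scale each row to unit length so dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def log_softmax(logits, axis):
    # Numerically stable log-softmax along the given axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def clip_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss; row i of each input is a matching pair."""
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature           # (N, N) similarity matrix
    diag = np.arange(len(logits))                # matching pairs sit on the diagonal
    loss_i2t = -log_softmax(logits, axis=1)[diag, diag].mean()  # image -> text
    loss_t2i = -log_softmax(logits, axis=0)[diag, diag].mean()  # text -> image
    return (loss_i2t + loss_t2i) / 2

rng = np.random.default_rng(0)
text_emb = rng.normal(size=(8, 16))                      # stand-in text embeddings
aligned = text_emb + 0.01 * rng.normal(size=(8, 16))     # images close to their captions
mismatched = aligned[::-1]                               # pairs deliberately scrambled

loss_aligned = clip_contrastive_loss(aligned, text_emb)
loss_mismatched = clip_contrastive_loss(mismatched, text_emb)
```

The loss is low when each image embedding sits next to its own caption and high when the pairing is scrambled. An adversarial perturbation exploits this geometry by moving an image's embedding away from where the clean image would land.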