Large language models (LLMs) have achieved remarkable progress in complex reasoning tasks, reaching human-expert levels in logic and mathematical problem-solving. However, extending these capabilities to multimodal contexts presents substantial challenges. Vision-language models (VLMs) excel at descriptive tasks but struggle with deeply logical multimodal reasoning, such as geometric proofs or scientific problem-solving.

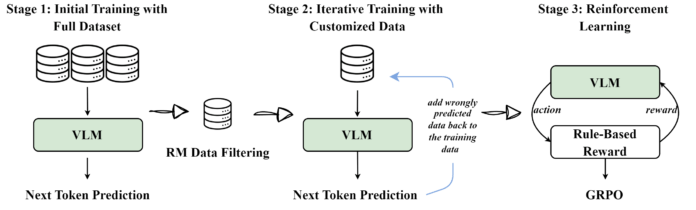

Researchers from Skywork AI have introduced Skywork R1V, a multimodal reasoning model that efficiently transfers the reasoning capabilities of a reasoning-capable language model to the visual domain. This is accomplished through three key innovations: an efficient multimodal transfer method using a lightweight visual projector, a hybrid optimization framework that combines iterative training approaches, and an adaptive-length chain-of-thought distillation technique that dynamically optimizes reasoning chain lengths.

With only 38B parameters, Skywork R1V performs competitively with much larger models, scoring 69.0 on the MMMU benchmark and 67.5 on MathVista, while maintaining robust textual reasoning abilities with 72.0 on AIME and 94.0 on MATH500. The model has been fully open-sourced to foster broader research and innovation in multimodal reasoning.

How Skywork R1V Works: A Technical Overview

Building Multimodal Reasoning: Efficient Transfer Approach

Directly connecting a reasoning-capable language model to a vision backbone would require extensive multimodal reasoning data to simultaneously align visual-language representations and preserve reasoning capabilities. The researchers propose an Efficient Multimodal Transfer method that decouples these objectives.
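To make the idea of a lightweight visual projector more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the authors' implementation: the two-layer MLP shape, the GELU activation, the toy dimensions, and the names `VisualProjector`, `make_linear`, and `num_parameters` are all hypothetical. The sketch only shows the general pattern of mapping per-patch vision features into the embedding width a language model expects, with the projector as the sole component that carries parameters.

```python
import math
import random


def make_linear(in_dim, out_dim, rng):
    """Return (weights, bias) for a dense layer with small random weights."""
    scale = 1.0 / math.sqrt(in_dim)
    weights = [[rng.uniform(-scale, scale) for _ in range(in_dim)]
               for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weights, bias


def linear(x, layer):
    """Apply a dense layer to a single feature vector."""
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def gelu(x):
    """Tanh approximation of GELU, applied element-wise."""
    c = math.sqrt(2.0 / math.pi)
    return [0.5 * v * (1.0 + math.tanh(c * (v + 0.044715 * v ** 3)))
            for v in x]


class VisualProjector:
    """Hypothetical two-layer MLP bridging vision features and LLM embeddings."""

    def __init__(self, vision_dim, llm_dim, seed=0):
        rng = random.Random(seed)
        self.fc1 = make_linear(vision_dim, llm_dim, rng)
        self.fc2 = make_linear(llm_dim, llm_dim, rng)

    def __call__(self, patch_features):
        # Project every patch vector independently into the LLM embedding space.
        return [linear(gelu(linear(p, self.fc1)), self.fc2)
                for p in patch_features]

    def num_parameters(self):
        # Count weights and biases across both layers.
        return sum(len(w) * len(w[0]) + len(b) for w, b in (self.fc1, self.fc2))


# Toy sizes (assumptions): 4 image patches, 8-dim vision features, 16-dim LLM embeddings.
VISION_DIM, LLM_DIM, NUM_PATCHES = 8, 16, 4
data_rng = random.Random(1)
patches = [[data_rng.gauss(0.0, 1.0) for _ in range(VISION_DIM)]
           for _ in range(NUM_PATCHES)]

projector = VisualProjector(VISION_DIM, LLM_DIM)
visual_tokens = projector(patches)
print(len(visual_tokens), len(visual_tokens[0]))
print(projector.num_parameters())
```

In a setup like this, the projected vectors would be placed alongside text token embeddings as input to the language model. Because the projector is small relative to the models it connects, training it is far cheaper than jointly retraining the vision backbone and the language model.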

Instead of a direct connection, they adopt a staged strategy: