On March 18, at Nvidia GTC 2025 in San Jose, California, CEO Jensen Huang revealed a number of new AI-accelerating GPUs that the company plans to release in the months and years ahead. He also revealed additional specifications for previously announced chips.

Vera Rubin, first teased at Computex 2024, was the centerpiece announcement. It is now scheduled for release in the second half of 2026. The GPU, named after an astronomer, will have 288 gigabytes of memory and will be paired with a custom Nvidia-designed CPU called Vera. Nvidia claims that Vera Rubin is a significant improvement over Grace Blackwell in performance, especially for AI training and inference. Huang presented specifications for Vera Rubin during his GTC keynote.

Vera Rubin has two GPUs on one die, delivering 50 petaflops of FP4 inference performance per chip. Configured in an NVL144 rack, the system can deliver 3.6 exaflops of FP4 inference compute. That is 3.3 times the 1.1 exaflops that Blackwell Ultra delivers in a similar rack configuration.
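The rack-level figures can be sanity-checked with a little arithmetic. The sketch below uses only the numbers quoted above. The variable names are illustrative, and it assumes that the "144" in NVL144 counts individual GPUs, which the figures are consistent with but which is not stated here.

```python
# Figures quoted in the article (all FP4 inference).
PFLOPS_PER_CHIP = 50                 # petaflops per Vera Rubin chip
GPUS_PER_CHIP = 2                    # two GPUs per chip
RUBIN_RACK_EFLOPS = 3.6              # Vera Rubin in an NVL144 rack
BLACKWELL_ULTRA_RACK_EFLOPS = 1.1    # Blackwell Ultra in a similar rack

PFLOPS_PER_EFLOP = 1000

# Number of chips implied by the rack total and the per-chip figure.
chips_per_rack = RUBIN_RACK_EFLOPS * PFLOPS_PER_EFLOP / PFLOPS_PER_CHIP
# Assumption: the rack name counts GPUs, not chips.
gpus_per_rack = chips_per_rack * GPUS_PER_CHIP
# Generation-over-generation gain at rack level.
speedup = RUBIN_RACK_EFLOPS / BLACKWELL_ULTRA_RACK_EFLOPS

print(round(chips_per_rack), round(gpus_per_rack), round(speedup, 1))
# prints: 72 144 3.3
```

Under that assumption, 72 chips of two GPUs each account for both the 144 in the rack name and the 3.6 exaflops total.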

The Vera CPU has 88 custom Arm cores with 176 threads and connects to the Rubin GPUs via a high-speed NVLink interface rated at 1.8 TB/s.

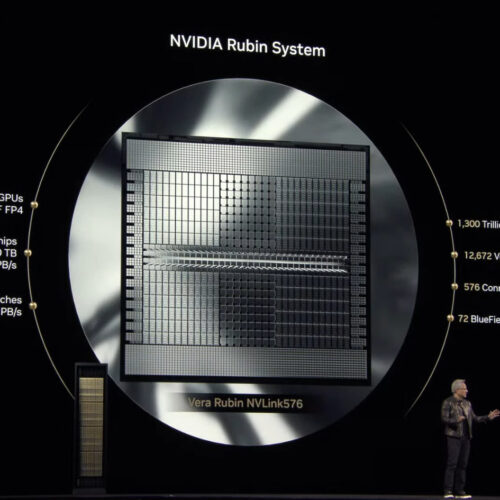

Huang also announced Rubin Ultra, which will be released in the second half of 2027. Rubin Ultra will feature four reticle-sized GPUs and come in an NVL576 rack configuration, delivering 100 petaflops of FP4 precision per chip. (FP4 is a 4-bit floating-point format used to represent and process numbers within AI models.)
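To make "4-bit floating point" concrete, here is a minimal sketch that decodes the bit patterns of one common FP4 layout, E2M1 (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1). The article does not say which layout Nvidia's hardware uses, so treat E2M1 and the helper name `decode_e2m1` as assumptions for illustration only.

```python
def decode_e2m1(bits: int) -> float:
    """Decode a 4-bit pattern under the assumed E2M1 layout: s | ee | m, bias 1."""
    sign = -1.0 if (bits >> 3) & 1 else 1.0
    exponent = (bits >> 1) & 0b11
    mantissa = bits & 1
    if exponent == 0:
        # Subnormal: no implicit leading 1, so the magnitude is 0 or 0.5.
        return sign * mantissa * 0.5
    # Normal: implicit leading 1, value = 1.m * 2^(exponent - bias).
    return sign * (1 + mantissa * 0.5) * 2.0 ** (exponent - 1)


# The eight non-negative patterns (sign bit clear).
positive_values = [decode_e2m1(b) for b in range(8)]
print(positive_values)
# prints: [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
```

Under this assumed layout only eight distinct magnitudes, from 0 to 6, can be represented, which illustrates how coarse a 4-bit format is compared with FP8 or FP16.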

Rubin Ultra’s rack configuration will deliver 15 exaflops of FP4 inference compute and 5 exaflops of FP8 training compute, making it approximately four times as powerful as the Rubin NVL144. Each Rubin Ultra GPU includes 1TB of HBM4e memory, with a total of 365TB of fast memory.
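The "approximately four times" figure follows from the rack numbers quoted in this article. A quick sketch, assuming the comparison is made on FP4 inference throughput (the variable names are illustrative):

```python
# Rack-level figures quoted in the article, in exaflops.
RUBIN_ULTRA_FP4_EFLOPS = 15      # Rubin Ultra rack, FP4 inference
RUBIN_ULTRA_FP8_EFLOPS = 5       # Rubin Ultra rack, FP8 training
RUBIN_NVL144_FP4_EFLOPS = 3.6    # Rubin NVL144 rack, FP4 inference

# Gain over the previous generation, assuming an FP4-to-FP4 comparison.
fp4_gain_over_rubin = RUBIN_ULTRA_FP4_EFLOPS / RUBIN_NVL144_FP4_EFLOPS
# How much higher the quoted FP4 figure is than the quoted FP8 figure.
fp4_to_fp8_ratio = RUBIN_ULTRA_FP4_EFLOPS / RUBIN_ULTRA_FP8_EFLOPS

print(f"{fp4_gain_over_rubin:.2f}x over Rubin NVL144 (FP4)")
print(f"{fp4_to_fp8_ratio:.1f}x FP4 inference vs. FP8 training")
# prints:
# 4.17x over Rubin NVL144 (FP4)
# 3.0x FP4 inference vs. FP8 training
```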