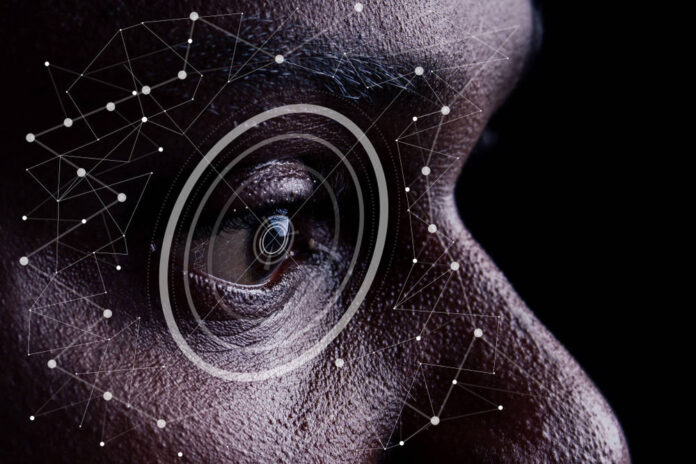

Police in Cleveland, Ohio, could see a murder case collapse because they relied on AI facial recognition software to obtain a search warrant.

They did so despite a warning from the developer of the facial recognition software, Clearview AI, that its results may not be accurate and should not be relied upon as the sole basis for arrests.

According to The Cleveland Plain Dealer and its associated website Cleveland.com, police investigating the February 2024 murder of Blake Story obtained surveillance video from February 14 that showed an unknown person shooting at the victim. Clearview could not identify the killer because his face wasn’t visible in that footage.

Undeterred, the cops used Clearview to identify a man in separate surveillance footage taken six days later, on February 20, 2024. Police concluded that he may have been the shooter seen in the February 14 footage, based on his clothing and walking style, and obtained a warrant for the computer-identified individual.

Police executed the search warrant at the residence of the suspect’s partner and found a gun that they believe to be the murder weapon. They also obtained other evidence, including clothing and a statement.

On January 9, 2025, the Cuyahoga County, Ohio, judge presiding over the case granted the defense’s motion to exclude evidence because the court was not adequately informed about the investigation, including the use of Clearview AI’s facial recognition.

Brian M. Fallon, the defense attorney, cited a police report stating that officers had asked the Northeast Ohio Regional Fusion Center (an interagency law-enforcement intelligence group) to identify someone in the February 20 surveillance video in order to obtain his address. The center produced a document identifying the person in that footage, but not in the footage of the actual murder. Fallon’s motion to suppress also notes that the identification was made using AI and came with warning labels: “The report from the Fusion center is from Clearview AI, an artificial intelligence facial recognition software company. This company has such faith in its software that it prints the following on every page of its report…”

The motion goes on to cite an even more extensive disclaimer on Clearview AI’s website.

Fallon said that the wording in the police affidavit does not make it clear that the video in which the suspect’s identity was determined was captured six days after the video of the murder. It also doesn’t establish that the defendant identified by Clearview AI’s software in the later video was present in the recording of the killing.

The police affidavit links the defendant, identified by Clearview AI in the February 20 video, to the unidentified person in the February 14 video of the killing by noting that the defendant has “the same build, hair style, clothing, and walking characteristics as observed on the suspect on February 14, 2024.”

The Cleveland Police Department did not immediately respond to a request for comment. According to The Cleveland Plain Dealer, a county prosecutor claimed that police did use AI in their case.

Fallon said that the real issue is that the prosecutor has an obligation to disclose relevant details to the judge.

Fallon said that he requested a Daubert hearing, referring to the US Supreme Court decision that allows the admissibility of expert testimony to be challenged. He said the homicide unit doesn’t understand the technology and isn’t properly trained on it. He added that Ohio still has no rules governing the use of AI facial recognition.

That, he said, tells him that Clearview does not want to defend its technology in court.

New York-based Clearview did not respond to a request for comment. In June 2024, the company struck a deal to resolve a privacy complaint involving allegations of unauthorized image scraping.

We noted in October that police often use facial recognition technology.

After the defense won its motion to exclude evidence, prosecutors appealed the ruling. They acknowledged that their case is at risk if the ruling is not reversed.

Police arrested the wrong person and now refuse to come to court in order to admit it.

Qeyeon Tolbert, 23, who denies any wrongdoing, filed his own motion to dismiss on January 14, 2025. The motion was prompted by continuances obtained by the prosecution, which have kept the case from being dismissed. The motion states:

“The defendant’s DNA was not found on the scene or on the victim which is hard to fathom when it was supposed to have been a robbery.

“There are no witnesses who can say that the defendant is the person who did this or has seen the defendant. There was no gunshot residue on the defendant, or any of the items taken in evidence. Shoes taken as evidence did not belong to defendant and could not fit defendant for an unknown reason. The defendant’s phone was not pinged near the crime scene and even the victim family has stated that they have never seen him, proving their lack of familiarity.

“There are no identifying marks on video footage that can be linked to the defendant and there were none of the victim’s belongings found in the defendant’s home. All this goes to the proof that the police arrested the wrong man and now do not want to come to court to admit it.”