What happens when you challenge one of the most basic assumptions in language AI? We’ve spent years making tokenizers bigger and more sophisticated, training them on more data, expanding their vocabularies. But what if that whole approach is fundamentally limiting us?

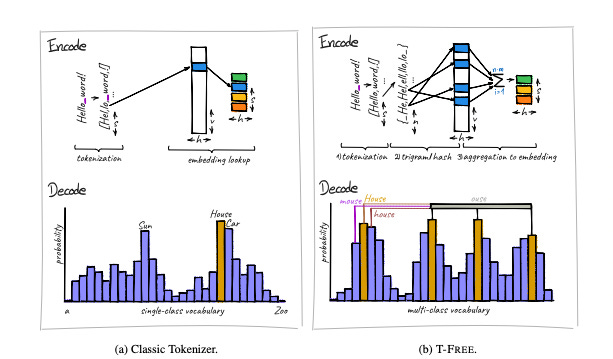

The researchers behind T-FREE ask exactly this. Their answer could change how we build language models. Instead of using a fixed vocabulary of tokens, they show how to map words directly into sparse patterns, and in doing so cut the parameters in the embedding and output layers by more than 85% while remaining competitive with standard models.

When I first read about their approach, I was skeptical. Language models have used tokenizers since their inception – it seemed like questioning whether cars need wheels. But as I dug into the paper, I found myself getting increasingly excited. The researchers are showing us how our standard solutions have trapped us in a particular way of thinking, and I think that’s a very refreshing way to look at LLM performance.