Nvidia acquires Run:ai.

Image credit: Run:ai.


Nvidia has completed its acquisition of Run:ai, a software company that makes it easier for customers to orchestrate GPU clouds for AI. Run:ai also said that the software will be open-sourced.

The purchase price was not disclosed, but it was reported at $700 million when Nvidia announced its intention to make the deal in April. Run:ai announced the completion of the deal on its website today and said that Nvidia plans to open-source the software. Run:ai's software schedules Nvidia GPU resources for AI in the cloud.

Neither company has explained why Run:ai's platform is being open-sourced, but it's not difficult to guess. Nvidia's market value has risen to $3.56 trillion since it became the world's leading AI chip maker. That's great for Nvidia, but it also makes it harder for the company to acquire others because of antitrust oversight. It's possible that such oversight is a factor here.

In a statement, a spokesperson for Nvidia said, “We are delighted to welcome the Run:ai team to Nvidia.”

In a press statement, Run:ai founders Omri Geller and Ronen Dar said that open-sourcing the software will help the community build better AI faster. Geller and Dar added that while Run:ai currently supports only Nvidia GPUs, open-sourcing the software will make it available to the entire AI ecosystem.

The founders said that they would continue to help customers get the most from their AI infrastructure and offer the ecosystem maximum efficiency, flexibility and utilization for GPU systems, wherever they may be: on-prem, in the cloud via native solutions, or on Nvidia DGX Cloud, co-engineered with leading CSPs.

According to the founders, “True to our open-platform philosophy, as part of Nvidia, we will continue to empower AI teams with the ability to choose the tools, platforms and frameworks which best suit their needs. We will continue to work with the ecosystem and strengthen our partnerships to provide a wide range of AI solutions and platforms.”

The Israel-based company said that its goal was to be the driving force behind the AI revolution and to empower organizations to unlock the full potential of their AI infrastructure.

The founders said that over the years they have achieved milestones they could not even have imagined back then, and that together they had built an innovative product, an amazing technology, and a go-to-market engine.

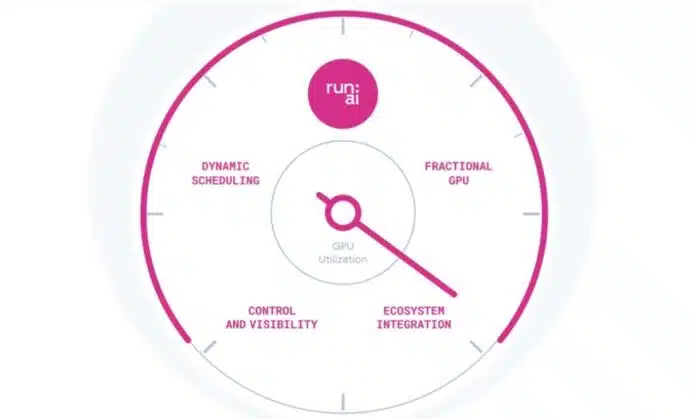

The Run:ai platform helps customers orchestrate their AI infrastructure, increase efficiency and utilization, and boost the productivity of their AI teams.

“We are excited to continue this momentum as part of Nvidia. AI and accelerated computation are transforming the global economy at an unprecedented rate, and we think this is only the beginning,” said the Run:ai founding team. “GPUs will continue to be at the forefront in driving these transformative innovations, and joining Nvidia gives us an extraordinary chance to carry on a joint mission in helping humanity solve the greatest challenges of the world.”

Nvidia is a longtime manufacturer of graphics chips. These chips have become more useful in the last few years for running AI software. The company is now focusing on software. This acquisition aims to give customers the maximum flexibility, efficiency, and choice for GPU orchestration. Nvidia has been working with Run:ai since 2020, and they share customers.

TLV Partners led Run:ai's seed round in 2018. Rona Segev said in a TLV statement, “The AI market seemed to be a completely different world in early 2018. OpenAI was a research company, and Nvidia had a market cap of ‘only’ $100 billion. We met Omri and Ronen, and they painted a picture of the future of AI. In their vision, AI would be ubiquitous.”

Segev continued, “Everyone would be interacting daily with AI, and it would become obvious that every company will be leveraging AI one way or another. According to them, the only thing stopping that vision from becoming reality was the lack of efficiency and the costs associated with training AI models and running them in a production environment on multiple GPU clusters. To solve this issue, Omri and Ronen pitched the idea of creating a layer of orchestration between AI models and GPUs. This would allow a more efficient use of compute resources, leading to faster training and significantly lower costs.”

Segev added, “Of course, this was all hypothetical at the time, as they hadn’t even incorporated a business, let alone created a product. We didn’t have much knowledge about the industry. There was something special about Omri and Ronen. They had a unique mixture of intellect, charm and craziness, which made the perfect recipe for the type of founders that we are looking to support.”
